If you’re using Kubernetes to deploy your applications, you’ll be well aware of the scale, cost, and reliability benefits k8s brings. Likewise, autoscaling your CI/CD build agents as Kubernetes pods ensures your CI infrastructure is efficiently utilized, and your jobs are picked up and processed without delay.

This post examines some best practices for running CI/CD on Kubernetes, covering key security, observability, frugality, and flexibility concerns.

Security

Security is job number zero: things have to be secure. It's relatively simple to make things “just work” as an admin, with everything having access to everything. But when you run CI/CD workloads on a Kubernetes cluster, things have to be locked down.

The principle of least privilege is well known, and it certainly applies to Kubernetes clusters. You’ll need to implement role-based access control (RBAC) and restrict exactly who and what can access which resources.
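As a minimal sketch of least-privilege RBAC, assuming the CI agent only needs to launch, watch, and clean up job pods in a single namespace, the code below builds a Role and RoleBinding as Python dicts and checks that no rule uses the `*` wildcard. The namespace `ci-agents`, the `ci-agent` names, and the helper functions are hypothetical; your agent may need more or fewer permissions.

```python
import json

# Hypothetical namespace and object names; adjust to match your cluster.
CI_NAMESPACE = "ci-agents"
ROLE_NAME = "ci-agent"


def ci_agent_role(namespace: str) -> dict:
    """A namespaced Role granting only what a CI agent needs to run job pods."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": ROLE_NAME, "namespace": namespace},
        "rules": [
            {
                # Create, watch, and clean up job pods, in this namespace only.
                "apiGroups": [""],
                "resources": ["pods"],
                "verbs": ["create", "get", "list", "watch", "delete"],
            },
            {
                # Read job logs; no write access.
                "apiGroups": [""],
                "resources": ["pods/log"],
                "verbs": ["get"],
            },
        ],
    }


def ci_agent_binding(namespace: str, service_account: str) -> dict:
    """Bind the Role to the service account the agent runs as."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": ROLE_NAME, "namespace": namespace},
        "subjects": [
            {"kind": "ServiceAccount", "name": service_account, "namespace": namespace}
        ],
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "Role",
            "name": ROLE_NAME,
        },
    }


def has_wildcards(role: dict) -> bool:
    """True if any rule uses '*', which undermines least privilege."""
    return any(
        "*" in rule.get(field, [])
        for rule in role["rules"]
        for field in ("apiGroups", "resources", "verbs")
    )


if __name__ == "__main__":
    role = ci_agent_role(CI_NAMESPACE)
    assert not has_wildcards(role)
    manifest = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [role, ci_agent_binding(CI_NAMESPACE, "ci-agent")],
    }
    # kubectl apply -f accepts JSON as well as YAML.
    print(json.dumps(manifest, indent=2))
```

Keeping the Role namespaced (rather than a ClusterRole) means a compromised agent token can't touch anything outside its own namespace.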

In a VM environment and a VPC, there is a well-known set of security practices to follow. But Kubernetes brings its own abstractions and layers, each of which needs securing. For example, there's east-west traffic flowing between services: your inter-service communication. Here, it’s important to make sure services can only communicate with the services you intend, paying particular attention to ruling out any chance of accidental privilege escalation.
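One common way to restrict east-west traffic is a default-deny NetworkPolicy plus narrow allow rules. The sketch below assumes a hypothetical `artifact-cache` service that only CI job pods (labelled `role: ci-job`) should reach on port 8080. It builds both policies as dicts and includes a deliberately simplified evaluator (single namespace, `podSelector` peers only) for sanity-checking them. Note that NetworkPolicies are only enforced if your cluster's network plugin supports them.

```python
def default_deny_ingress(namespace: str) -> dict:
    """Select every pod in the namespace and allow no ingress."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_ingress(namespace: str, name: str, to_labels: dict,
                  from_labels: dict, port: int) -> dict:
    """Allow TCP traffic on one port, only from pods carrying from_labels."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": to_labels},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": from_labels}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


def _selects(selector: dict, labels: dict) -> bool:
    # matchLabels semantics: every key/value must be present; {} selects all pods.
    return all(labels.get(k) == v for k, v in selector.get("matchLabels", {}).items())


def ingress_allowed(policies: list, src: dict, dst: dict, port: int) -> bool:
    """Simplified check for pods in one namespace, podSelector peers only."""
    applicable = [
        p for p in policies
        if "Ingress" in p["spec"]["policyTypes"] and _selects(p["spec"]["podSelector"], dst)
    ]
    if not applicable:
        return True  # a pod selected by no ingress policy accepts all traffic
    for policy in applicable:  # policies are additive: any matching rule allows
        for rule in policy["spec"].get("ingress", []):
            peers, ports = rule.get("from"), rule.get("ports")
            # An omitted or empty "from"/"ports" list matches everything.
            peer_ok = not peers or any(_selects(peer["podSelector"], src) for peer in peers)
            port_ok = not ports or any(p.get("port") == port for p in ports)
            if peer_ok and port_ok:
                return True
    return False


if __name__ == "__main__":
    policies = [
        default_deny_ingress("ci-agents"),
        allow_ingress("ci-agents", "allow-cache-from-jobs",
                      {"app": "artifact-cache"}, {"role": "ci-job"}, 8080),
    ]
    print(ingress_allowed(policies, {"role": "ci-job"}, {"app": "artifact-cache"}, 8080))
```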

Because CI is effectively remote code execution as a service, at scale, you need appropriate guardrails around that capability. You need to make sure that what's happening in your CI environment is exactly what you’ve designed to happen. Audit logging lets you validate and prove that what you think is happening is all that’s happening, by giving you visibility into every action taken in CI and in your Kubernetes cluster.
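Kubernetes API server auditing is configured with an audit policy, passed to kube-apiserver via `--audit-policy-file` on self-managed control planes; managed services such as Amazon EKS expose audit logs through their own control-plane logging settings instead. Below is a minimal sketch of a CI-oriented policy, assuming a hypothetical `ci-agents` namespace. The rule choices are illustrative, and `audit_level` is a simplified first-match evaluator for reviewing the policy, not the API server's own logic.

```python
def ci_audit_policy(ci_namespace: str) -> dict:
    """An audit.k8s.io/v1 Policy; rules are evaluated in order, first match wins."""
    return {
        "apiVersion": "audit.k8s.io/v1",
        "kind": "Policy",
        # Skip the extra event emitted when a request is first received.
        "omitStages": ["RequestReceived"],
        "rules": [
            # Record who touched Secrets/ConfigMaps, but never their contents.
            {"level": "Metadata",
             "resources": [{"group": "", "resources": ["secrets", "configmaps"]}]},
            # exec/attach run arbitrary commands inside pods: keep full detail.
            {"level": "RequestResponse",
             "resources": [{"group": "", "resources": ["pods/exec", "pods/attach"]}]},
            # Job pod creation and deletion in the CI namespace, with request bodies.
            {"level": "Request", "verbs": ["create", "delete"],
             "namespaces": [ci_namespace],
             "resources": [{"group": "", "resources": ["pods"]}]},
            # Everything else: metadata only.
            {"level": "Metadata"},
        ],
    }


def audit_level(policy: dict, resource: str, verb: str, namespace: str) -> str:
    """Simplified first-match evaluation (core API group only) for policy review."""
    for rule in policy["rules"]:
        if "verbs" in rule and verb not in rule["verbs"]:
            continue
        if "namespaces" in rule and namespace not in rule["namespaces"]:
            continue
        if "resources" in rule and not any(
            resource in group["resources"] for group in rule["resources"]
        ):
            continue
        return rule["level"]
    return "None"


if __name__ == "__main__":
    policy = ci_audit_policy("ci-agents")
    print(audit_level(policy, "pods/exec", "create", "ci-agents"))
```

Putting the Secrets rule first matters: because the first match wins, Secret contents never reach the audit log even if a later rule would have logged request bodies.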

Observability

Audit logs provide a level of observability and security for CI and Kubernetes, but your observability needs will likely be far greater. Having observability into your Kubernetes clusters, CI pipelines, and everything that's happening across the entire CI/CD system considerably reduces complexity, and can provide useful context at a glance (or several glances).

By comparison, getting insight into what’s happening in a VM environment is easy: you can SSH into a virtual machine and see exactly how things like disk space and memory are faring. It’s all conveniently at your fingertips!

Kubernetes makes things slightly more challenging. There are multiple layers: the container, the pod, the node, and the resources being used. External observability tooling reduces this complexity, so you can get answers faster without needing to SSH into nodes directly. Good observability means you’re able to identify when build agents are disappearing and see when things are changing unexpectedly, so the ground doesn't shift out from beneath your feet.
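Detecting disappearing agents can start as simply as diffing periodic snapshots of agent pods. The sketch below assumes you already collect pod names and phases on a schedule (for example, from the Kubernetes API); the `AgentSnapshot` type, the function names, and the alert threshold are hypothetical. An agent that vanishes while still `Running` or `Pending`, rather than after `Succeeded`, may point to an eviction, a reclaimed spot node, or a lost node.

```python
from dataclasses import dataclass, field


@dataclass
class AgentSnapshot:
    """Agent pods observed at one point in time (e.g. from a periodic pod listing)."""
    taken_at: float  # seconds since the epoch
    phases: dict[str, str] = field(default_factory=dict)  # pod name -> pod phase


def vanished_agents(before: AgentSnapshot, after: AgentSnapshot) -> list[str]:
    """Agents seen earlier that are now gone without having been seen to succeed."""
    return sorted(
        name for name, phase in before.phases.items()
        if name not in after.phases and phase != "Succeeded"
    )


def churn_alert(before: AgentSnapshot, after: AgentSnapshot, max_per_hour: float):
    """Return (should_alert, vanished) when agents disappear faster than expected."""
    hours = (after.taken_at - before.taken_at) / 3600
    if hours <= 0:
        raise ValueError("snapshots must be taken in chronological order")
    vanished = vanished_agents(before, after)
    return len(vanished) / hours > max_per_hour, vanished


if __name__ == "__main__":
    earlier = AgentSnapshot(0, {"agent-a": "Running", "agent-b": "Running"})
    later = AgentSnapshot(1800, {"agent-a": "Running"})
    print(churn_alert(earlier, later, max_per_hour=1))
```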

Your systems should be observable and traceable over time, so you can spot trends and look back at what happened over the last 30, 60, or 90 days, or this week versus last week. You’ll also need a view of your entire ecosystem, so you can look at things from 50,000 feet.

Access to metrics that let you measure and account for the state of your system is also essential. With them, you can compare things like error rates from one month to the next and track whether things are getting better or worse. Metrics also let you set system SLOs and measure how you’re tracking against them. Observability provides a high-level understanding of trends and patterns over time, as well as insight into performance issues that prompts more granular investigation. There’ll be times when you’re led right down to the trace-level view, digging in the dirt to understand an outlier, such as a pipeline that ran six hours longer than expected, by looking at granular details like what the environment variables were set to.
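As a worked example of tracking error rates against an SLO, the sketch below computes a monthly pipeline error rate, how much of the error budget each month consumes, and whether things got better or worse. It assumes a hypothetical 99% pipeline-success SLO and run records of the form `(month, succeeded)`.

```python
from collections import defaultdict


def monthly_error_rates(runs) -> dict[str, float]:
    """runs: iterable of (month "YYYY-MM", succeeded: bool) -> {month: error rate}."""
    totals, failures = defaultdict(int), defaultdict(int)
    for month, succeeded in runs:
        totals[month] += 1
        if not succeeded:
            failures[month] += 1
    return {month: failures[month] / totals[month] for month in sorted(totals)}


def slo_report(rates: dict[str, float], target_success: float = 0.99) -> dict:
    """Compare each month's error rate with the error budget implied by the SLO."""
    budget = 1 - target_success  # a 99% success SLO leaves a 1% error budget
    report, previous = {}, None
    for month, rate in rates.items():
        if previous is None:
            trend = None
        elif rate > previous:
            trend = "worse"
        elif rate < previous:
            trend = "better"
        else:
            trend = "flat"
        # budget_used above 1.0 means the month blew its error budget.
        report[month] = {"error_rate": rate, "budget_used": rate / budget, "trend": trend}
        previous = rate
    return report


if __name__ == "__main__":
    runs = ([("2024-01", True)] * 995 + [("2024-01", False)] * 5
            + [("2024-02", True)] * 980 + [("2024-02", False)] * 20)
    for month, row in slo_report(monthly_error_rates(runs)).items():
        print(month, row)
```

With 5 failures in 1,000 runs one month and 20 in 1,000 the next, the first month uses about half of the 1% budget, the second about double it, and the report marks the trend as worse.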

Consider how your team is likely to use metrics and the logs from your Kubernetes CI/CD workloads. Your team will need two different types of data:

- Trace data: Sampled traces and aggregated metrics that show trends over time

- Log data: All of your cluster workload logs, used for deep dives and debugging

Separate your readily accessible trace-level data and your logs into two different buckets. You don’t need trace-level data for all of your hundreds of thousands of gigabytes of logs; if you keep it all, expect a very large bill. To keep the costs of your monitoring and analytics tool (such as Datadog) and your cloud provider in check, carefully consider how you retrieve and store this data. Sample your readily accessible trace-level data rather than streaming every single bit, and keep it separate from your larger, slightly slower-to-access log data. You can stream and/or sample your logs into something like the Elastic Stack (a.k.a. ELK), which offers powerful search when troubleshooting an issue.
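Sampling trace data doesn't have to be random per service. A common approach is deterministic, ratio-based sampling on the trace ID, so every service keeps or drops the same traces; OpenTelemetry's `TraceIdRatioBased` sampler works on this principle. The sketch below is a hypothetical stand-alone version, assuming a 10% sample rate and made-up destination names (`log-store`, `apm`) for the two buckets.

```python
import hashlib


def keep_trace(trace_id: str, sample_rate: float) -> bool:
    """Deterministic head sampling: the same trace ID always gets the same decision,
    so every service that sees a trace agrees on whether to keep it."""
    if not 0.0 <= sample_rate <= 1.0:
        raise ValueError("sample_rate must be between 0 and 1")
    digest = hashlib.sha256(trace_id.encode("utf-8")).digest()
    # Top 53 bits of the hash -> a float uniformly spread over [0, 1).
    position = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    return position < sample_rate


def route(record: dict, trace_sample_rate: float = 0.1) -> list[str]:
    """Hypothetical router: logs always go to the log store; traces only if sampled."""
    if record["kind"] == "log":
        return ["log-store"]
    if record["kind"] == "trace" and keep_trace(record["trace_id"], trace_sample_rate):
        return ["apm"]
    return []


if __name__ == "__main__":
    kept = sum(keep_trace(f"trace-{i}", 0.1) for i in range(10_000))
    print(f"kept {kept} of 10000 traces")
```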

Frugality

On the subject of big bills, frugality is a major focus for teams responsible for managing infrastructure, and you will want to be accountable for keeping Kubernetes cluster costs in check. If you’ve come from hosted CI/CD, or self-hosted CI/CD in a VM environment, you'll be excited by just how fast Kubernetes is going to be: pods spin up and down faster, resources can be controlled more effectively, and everything is in your control. It is going to be cheaper per build; there's no denying it. Running CI/CD on Kubernetes makes your platform lightning fast and highly responsive, and lends itself to massive parallelism, so you’ll find your engineers using it more. The flip side of removing developer frustration and making CI/CD easier and more reliable is that your overall cluster costs will increase along with that usage.

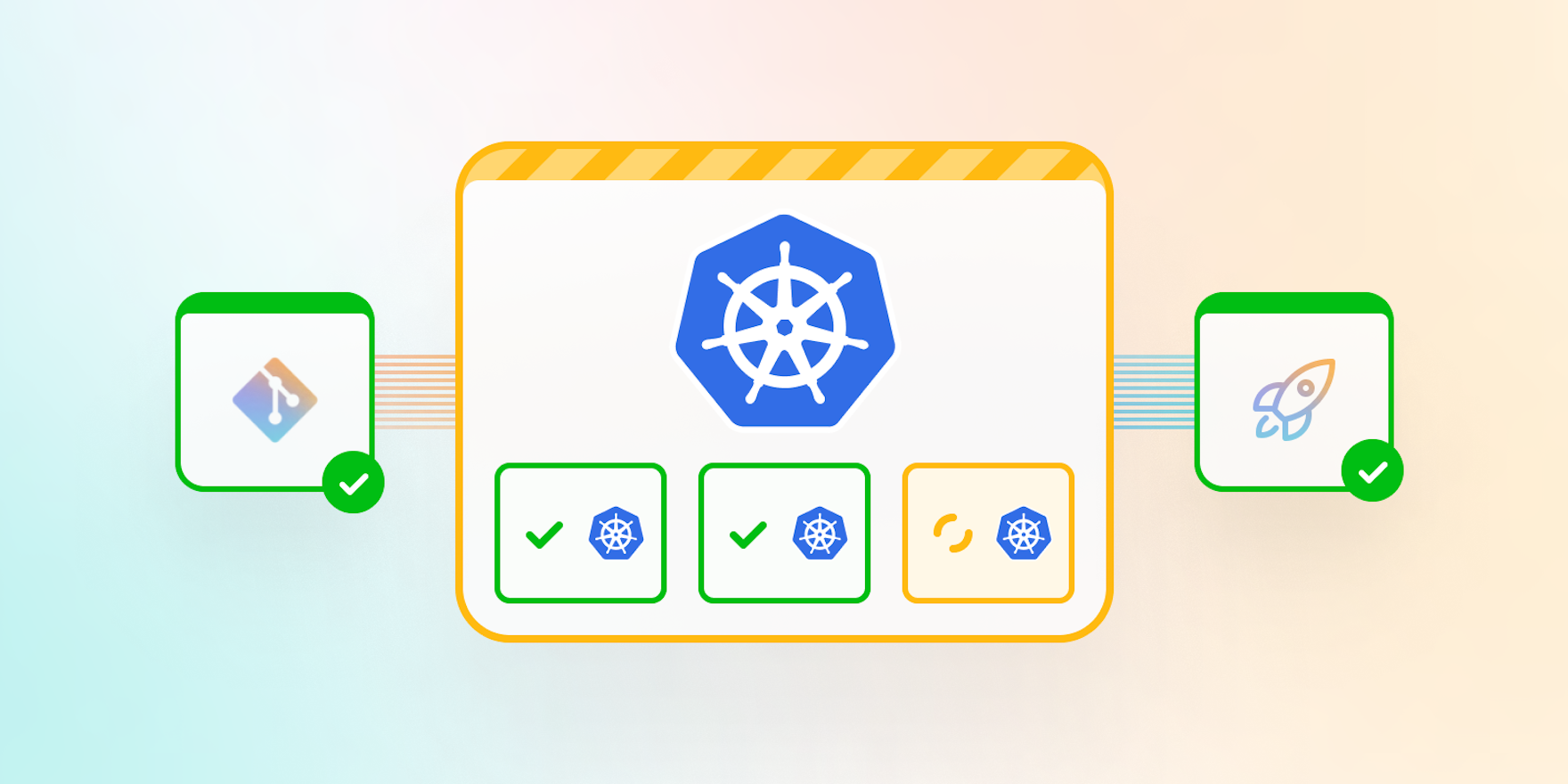

For accountability, cost, and security reasons, you will want to separate your clusters. Your CI cluster shouldn't be your production cluster; keep them isolated. That way you can clearly account for what you're spending on CI/CD versus what you're spending on production traffic.

Consider having three separate clusters for build, test, and deploy; understand how much you're spending on each, and put appropriate controls in place. Leverage spot instances, or use different instance sizes for different purposes: smaller instances for build and test jobs in CI, mid-size instances for deploys, and the souped-up 64-core, 32 GB of RAM beasts reserved for your production clusters. Managed services such as Amazon EKS let you choose instance types and capacity (including spot) per node group. On the Kubernetes side, you can set CPU and memory requests and limits on your CI pods to really maximize your compute utilization.
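The scheduler places pods according to their resource requests, while limits cap what a running container may use (CPU above the limit is throttled; memory above it gets the container OOM-killed). The minimal sketch below, assuming hypothetical per-job sizes and a node with 3920m CPU and 15Gi of allocatable memory, shows how requests determine how many CI pods fit on a node. Its quantity parsers are simplified and handle only the `m`, `Ki`/`Mi`/`Gi`, and plain-number forms.

```python
# Hypothetical defaults; tune these to your own builds.
def ci_job_container(image: str, cpu_request: str = "500m", mem_request: str = "1Gi",
                     cpu_limit: str = "2", mem_limit: str = "2Gi") -> dict:
    """Container spec for a CI job; requests drive scheduling, limits cap usage."""
    return {
        "name": "build",
        "image": image,
        "resources": {
            "requests": {"cpu": cpu_request, "memory": mem_request},
            "limits": {"cpu": cpu_limit, "memory": mem_limit},
        },
    }


_BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def cpu_millicores(quantity: str) -> int:
    """Parse a CPU quantity like "500m" or "2" (simplified parser)."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def memory_bytes(quantity: str) -> int:
    """Parse a memory quantity like "1Gi" or "512Mi" (binary suffixes only)."""
    for suffix, factor in _BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def pods_per_node(container: dict, allocatable_cpu: str, allocatable_memory: str) -> int:
    """How many of these pods fit on one node, judging by requests alone."""
    requests = container["resources"]["requests"]
    by_cpu = cpu_millicores(allocatable_cpu) // cpu_millicores(requests["cpu"])
    by_memory = memory_bytes(allocatable_memory) // memory_bytes(requests["memory"])
    return min(by_cpu, by_memory)


if __name__ == "__main__":
    job = ci_job_container("registry.example.com/ci/builder:latest")  # placeholder image
    print(pods_per_node(job, "3920m", "15Gi"))
```

In this example CPU is the binding constraint: seven 500m pods fit while most of the memory sits idle, which suggests rebalancing requests or choosing a more CPU-heavy instance shape for CI nodes. The calculation ignores DaemonSets and other pods already on the node.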

Compared with a traditional VM environment for CI/CD, Kubernetes lets you be more frugal, with the ability to configure how clusters and resources are used at a granular level. It’s entirely feasible to have fast, responsive, and reliable CI/CD that’s also cheaper.

Flexibility

When you weigh up all the benefits of running CI/CD on your Kubernetes clusters, flexibility isn’t the biggest, but it’s definitely up there! VMs can be ephemeral too, but in Kubernetes clusters ephemeral pods spin up automatically, and your ability to parallelize increases dramatically. You now have the opportunity to completely rethink how you handle your CI/CD workloads and how your pipelines run.

Think of your CI/CD on Kubernetes as an event-driven system, no longer a collection of bash scripts running in sequence on the same host. In this new world, how could you model your build, test, and deploy jobs? How could you parallelize them?
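One way to approach parallelization is to model the pipeline as a dependency graph and group jobs into waves that can run concurrently. The sketch below applies Kahn's topological sort, grouped by level, to a hypothetical pipeline. In an event-driven setup, each job's completion event would trigger whichever dependents have just become ready; these waves describe the same ordering computed up front.

```python
from collections import defaultdict


def parallel_stages(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group jobs into waves; every job in a wave can run concurrently.

    deps maps each job to the set of jobs it depends on (Kahn's algorithm, by level).
    """
    jobs = set(deps) | {d for ds in deps.values() for d in ds}
    remaining = {job: 0 for job in jobs}  # unmet dependency count per job
    dependents = defaultdict(list)
    for job, ds in deps.items():
        for d in ds:
            remaining[job] += 1
            dependents[d].append(job)

    wave = sorted(job for job in jobs if remaining[job] == 0)
    stages, scheduled = [], 0
    while wave:
        stages.append(wave)
        scheduled += len(wave)
        next_wave = []
        for job in wave:
            for dependent in dependents[job]:
                remaining[dependent] -= 1
                if remaining[dependent] == 0:  # all dependencies finished
                    next_wave.append(dependent)
        wave = sorted(next_wave)

    if scheduled != len(jobs):
        raise ValueError("pipeline has a dependency cycle")
    return stages


if __name__ == "__main__":
    pipeline = {  # hypothetical pipeline
        "build": set(),
        "lint": set(),
        "unit-tests": {"build"},
        "integration-tests": {"build"},
        "deploy": {"unit-tests", "integration-tests", "lint"},
    }
    for i, stage in enumerate(parallel_stages(pipeline)):
        print(f"wave {i}: {stage}")
```

Here `build` and `lint` run together, both test suites run together once `build` finishes, and `deploy` waits for everything, so five sequential steps collapse into three waves.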

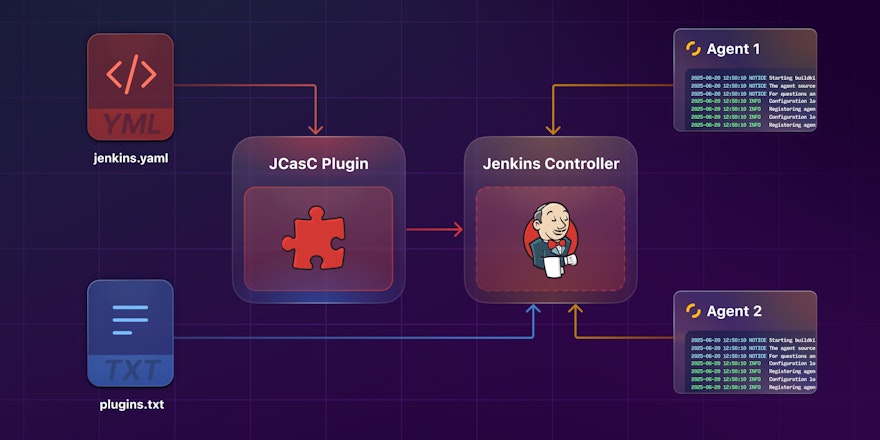

These open source options offer even more flexibility for your Kubernetes clusters:

- Kustomize – a template-free way to customize your Kubernetes configuration.

- Helm – a package manager that helps you define, install and upgrade even the most complex Kubernetes applications. A CNCF project maintained by the Helm community.

- Flagger – a progressive delivery operator that automates releases using techniques such as canary, A/B testing, and blue/green deployments.

Kubernetes has a strong, thriving open source community creating and supporting complementary tools. Use them for customizing your clusters and for how you do deploys, whether that’s rolling, canary, or blue/green.
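Progressive delivery tools such as Flagger shift traffic to a canary in steps and roll back after too many failed checks. The sketch below is a simplified, hypothetical model of that loop, not Flagger's implementation. Its parameter names mirror Flagger's `stepWeight`, `maxWeight`, and `threshold` analysis settings, and each health check is reduced to a boolean.

```python
def canary_weights(step_weight: int, max_weight: int) -> list[int]:
    """Traffic percentages sent to the canary at each step, e.g. 10, 20, ... 50."""
    if step_weight <= 0 or max_weight < step_weight:
        raise ValueError("need 0 < step_weight <= max_weight")
    return list(range(step_weight, max_weight + 1, step_weight))


def run_canary(checks, step_weight: int = 10, max_weight: int = 50, threshold: int = 2):
    """Advance one step per healthy check; roll back once failures reach threshold.

    checks: iterable of booleans, one per analysis interval (True = metrics healthy).
    Returns (outcome, canary weight held at each interval).
    """
    weights = canary_weights(step_weight, max_weight)
    history, failures, step = [], 0, 0
    for healthy in checks:
        history.append(weights[step])
        if healthy:
            step += 1
            if step == len(weights):
                return "promoted", history
        else:
            failures += 1  # stay at the current weight and re-check
            if failures >= threshold:
                history.append(0)  # send all traffic back to the stable version
                return "rolled_back", history
    return "in_progress", history


if __name__ == "__main__":
    print(run_canary([True] * 5))
    print(run_canary([True, False, True, False]))
```

A real controller would evaluate request success rate and latency metrics at each interval rather than consuming a precomputed list of booleans.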

Always apply the 80/20 rule when evaluating tools and platforms. You don’t need the perfect tool for deploys or CI/CD: if a tool gets you 80% of the way there, you can customize the remaining 20% to meet your unique needs. Writing your own glue code is a worthwhile and comparatively small investment if it lets you develop processes and conventions that fit your team’s needs. The flexibility gains are huge compared to the investment, especially when that investment is confined to the most critical part of your software delivery lifecycle: deployments. That’s truly a best practice when you're running CI/CD on Kubernetes.

Conclusion

Kubernetes offers incredible potential for scale, cost reduction, and increased reliability when running your CI/CD workloads. By applying a handful of these best practices, you’ll have reliable CI/CD that truly works for your needs, on a Kubernetes cluster that is secure, observable, and making good use of its compute resources with significantly reduced overhead.

To try out these best practices and run your own fleet of CI/CD workers or agents on Kubernetes, check out our beta open source Buildkite agent stack for Kubernetes. It works on any Kubernetes cluster and installs via a Helm chart.