When we released our MCP server earlier this year, we weren’t entirely sure what to expect. The MCP standard had been out for a bit, MCP servers seemed to be sprouting up everywhere, and we knew our users would want one, given how many of them were already weaving LLMs into their workflows.

What we didn't know was how they’d use it. What would they build with it? Where would they run it? How would it perform? What would we learn?

Once we shipped and open-sourced that first version, we saw several of our customers pick it up and start using it for real workloads, so we paid close attention — and the learning came quickly:

- Using AI agents in CI/CD workflows can be complicated. Debugging failures often requires sifting through build logs, and build logs can be huge; you can’t just throw a 50MB log at an agent and expect it to go well. Given their size, and how packed they often are with irrelevant noise (tool installs, verbose output, distracting warnings), build logs not only waste tokens, they can blow through context windows and overwhelm AI agents, producing low-quality results.

- Managing local MCP servers can be painful. Dropping a call to docker run into a config file to get up and running might seem convenient (and it's how most MCP servers still work), but configuring those servers with API keys, keeping them updated, etc., adds a layer of maintenance overhead (not to mention security risk, with all those API keys floating around on developer machines) that most users would rather avoid if they could.

- MCP tools aren't just for AI agents, they're also for humans. An effective MCP toolset doesn't just proxy a REST API function-for-function. It bridges the gap, sometimes a wide one, between what's technically possible with an API and what a human can reasonably achieve by successfully prompting an AI agent to do what they want, which isn't always as easy as you’d think.

Over the last few months, we’ve pulled all this learning into a handful of updates that we’re excited to share with you today — all aimed at making your experience of using the Buildkite MCP server even better.

What’s new in the MCP server?

We’ve made a ton of improvements across hundreds of commits since that first release, but the ones we’re sharing with you today fall into three categories:

- A new, fully managed remote MCP server, secured with OAuth

- Dramatically more efficient log fetching, parsing, and querying

- Specialized tooling for monitoring running builds

A new, managed remote MCP server, secured with OAuth

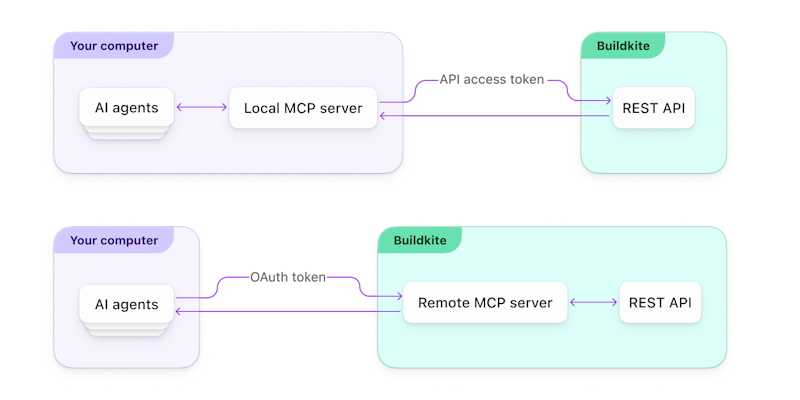

In addition to our local MCP server, which is installable through Docker, Homebrew, or as a platform-native binary, we now have a fully managed remote MCP server that’s publicly available and requires no installation at all.

The local and remote versions of the Buildkite MCP server.

Designed for ease of use, the remote MCP server exposes the same set of tools available in the local MCP server, only without the need to install anything locally or obtain (and then properly handle) any long-lived API access tokens. Just configure your AI tool to add a new http server with the following URL, and let your AI tool’s built-in, browser-based OAuth flow handle the rest:

    https://mcp.buildkite.com/mcp

For example, to add the MCP server to Claude Code using the claude CLI, you’d run:

    claude mcp add buildkite --transport http https://mcp.buildkite.com/mcp

To add it to VS Code, you’d add this block to your MCP servers list:

    {
      "servers": {
        "buildkite": {
          "url": "https://mcp.buildkite.com/mcp",
          "type": "http"
        }
      }
    }

Many more tools are supported. See Configuring AI tools in the remote MCP server docs for details.

By default, the OAuth login flow issues a short-lived access token that grants read-write access to the Buildkite platform and enables the full set of MCP server tools. If you’d prefer to lock things down a bit more, there’s also a read-only endpoint that limits the tools to the read-only set:

    https://mcp.buildkite.com/mcp/readonly

OAuth tokens are valid for 12 hours and refreshed automatically for up to seven days.

Some scenarios may still be better suited to the local MCP server, which remains a fully supported option. The local server also supports some additional configuration that the remote server doesn’t, like customizable toolsets, configurable caching options (more on this below), integration with 1Password (for safer handling of API tokens), and more.

To learn more about both server types, see the MCP server overview in the docs.

More efficient log fetching, parsing, and querying

We’ve also completely reworked our log-fetching, parsing, and querying tools to make them much better at handling text-heavy operations — and much more respectful of your context and token budgets.

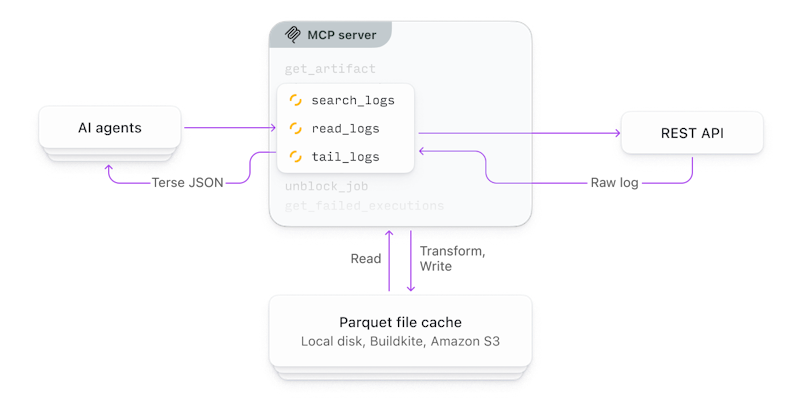

When you prompt your AI agent with a request that implicitly or explicitly calls for build logs, the MCP server now fetches those logs, immediately converts them into Apache Parquet format, and caches them before returning a much more focused result to the agent. Subsequent requests for the same log are pulled from the cache and returned in milliseconds, rather than seconds or tens of seconds.

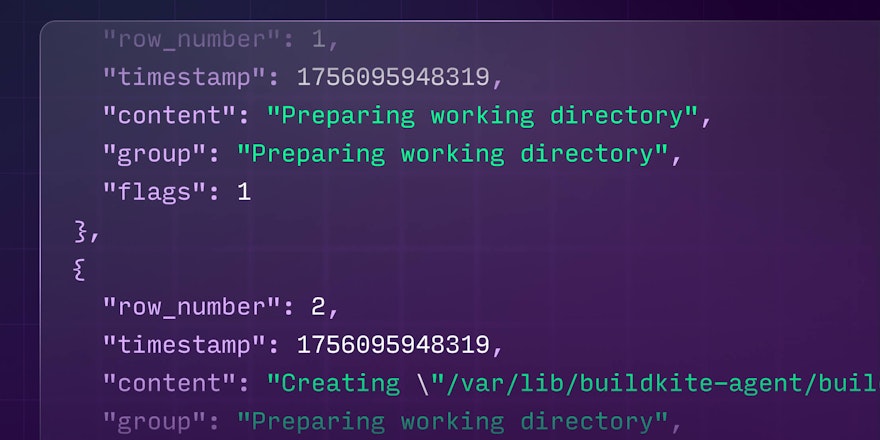

Logging tools in the MCP server. Logs are transformed into Apache Parquet format and cached for optimal performance and queryability.

For example, rather than simply return a full build log like this:

    [2024-09-30T10:15:23Z] Starting build...
    [2024-09-30T10:15:24Z] Checking out code...
    [2024-09-30T10:15:25Z] Installing dependencies...
    [2024-09-30T10:15:26Z] npm WARN deprecated package@1.0.0
    [2024-09-30T10:15:27Z] npm install completed
    [2024-09-30T10:15:28Z] Running tests...
    [2024-09-30T10:15:29Z] ✓ test 1 passed
    [2024-09-30T10:15:30Z] ✓ test 2 passed
    [... thousands more lines of irrelevant output ...]
    [2024-09-30T12:42:15Z] ✓ test 1,247 passed
    [2024-09-30T12:42:16Z] Starting TypeScript compilation...
    [2024-09-30T12:42:17Z] Compiling src/components/Button.tsx...
    [2024-09-30T12:42:18Z] Compiling src/utils/helpers.tsx...
    [2024-09-30T12:42:19Z] ERROR: Type 'string | undefined' is not assignable to type 'string'
    [2024-09-30T12:42:19Z] at src/components/UserProfile.tsx:42:18
    [2024-09-30T12:42:19Z] TypeScript compilation failed
    [2024-09-30T12:42:20Z] Build failed with exit code 1

… the MCP server would return a much terser and more focused JSON response that looked more like this:

    [
      {"ts": 1727692939000, "c": "Starting TypeScript compilation...", "rn": 44998},
      {"ts": 1727692940000, "c": "Compiling src/components/Button.tsx...", "rn": 44999},
      {"ts": 1727692941000, "c": "Compiling src/utils/helpers.tsx...", "rn": 45000},
      {"ts": 1727692942000, "c": "ERROR: Type 'string | undefined' is not assignable to type 'string'", "rn": 45001},
      {"ts": 1727692942000, "c": " at src/components/UserProfile.tsx:42:18", "rn": 45002},
      {"ts": 1727692943000, "c": "TypeScript compilation failed", "rn": 45003},
      {"ts": 1727692944000, "c": "Build failed with exit code 1", "rn": 45004}
    ]

… using far fewer tokens, and leading to much faster and more accurate results.
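If you're curious how a response of that shape could be produced, here's a minimal Python sketch. It is not the MCP server's actual implementation. It borrows the ts, c, and rn field names from the response above and assumes that every log line starts with a bracketed UTC timestamp, that ts is epoch milliseconds, and that rn is the line's zero-based position in the raw log. The parse_log and tail helpers are hypothetical names made up for this illustration.

```python
import json
import re
from datetime import datetime, timezone

# Assumed line shape: "[2024-09-30T12:42:19Z] message", as in the sample log.
LINE_RE = re.compile(r"^\[(\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2})Z\] (.*)$")


def parse_log(raw: str) -> list[dict]:
    """Turn raw log text into compact rows: ts (epoch ms), c (content), rn (row number)."""
    rows = []
    for rn, line in enumerate(raw.splitlines()):
        match = LINE_RE.match(line)
        if match is None:
            continue  # skip lines that don't fit the assumed format
        stamp = datetime.strptime(match.group(1), "%Y-%m-%dT%H:%M:%S")
        stamp = stamp.replace(tzinfo=timezone.utc)
        rows.append({"ts": int(stamp.timestamp() * 1000), "c": match.group(2), "rn": rn})
    return rows


def tail(rows: list[dict], n: int) -> list[dict]:
    """Return only the last n rows, which is usually where a failure shows up."""
    return rows[-n:] if n > 0 else []


sample = "\n".join([
    "[2024-09-30T12:42:18Z] Compiling src/utils/helpers.tsx...",
    "[2024-09-30T12:42:19Z] ERROR: Type 'string | undefined' is not assignable to type 'string'",
    "[2024-09-30T12:42:20Z] Build failed with exit code 1",
])

# Hand the agent the last two rows instead of the whole log.
print(json.dumps(tail(parse_log(sample), 2)))
```

The point of the sketch is the ratio: the agent receives a handful of structured rows from the end of the log rather than tens of thousands of raw lines.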

Caches are also collaboration-friendly. Users of the remote MCP server get these benefits automatically, with caches stored in the Buildkite platform and then shared across teams without requiring any additional configuration. Users of the local MCP server can configure their caches to use cloud object-storage services like Amazon S3 for similar benefits.
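Storage details aside, the fetch-once behavior described in this section is a read-through cache. The Python sketch below shows the pattern under loud assumptions: it keeps parsed lines in an in-memory dictionary as a stand-in for Parquet files, and the LogCache class, its fetch callable, and the job-123 ID are all hypothetical names rather than anything from the MCP server's code.

```python
from typing import Callable


class LogCache:
    """Read-through cache: fetch and split a job's log once, then reuse the result."""

    def __init__(self, fetch: Callable[[str], str]) -> None:
        self._fetch = fetch  # hypothetical callable that downloads a raw log by job ID
        self._lines: dict[str, list[str]] = {}  # in-memory stand-in for Parquet storage
        self.fetch_count = 0  # exposed only so the example can show cache hits

    def get(self, job_id: str) -> list[str]:
        """Return all lines for a job, fetching only on the first request."""
        if job_id not in self._lines:
            self.fetch_count += 1
            self._lines[job_id] = self._fetch(job_id).splitlines()
        return self._lines[job_id]

    def tail(self, job_id: str, n: int) -> list[str]:
        """Return the last n lines for a job, served from the cache when possible."""
        lines = self.get(job_id)
        return lines[-n:] if n > 0 else []


# A fake fetcher stands in for the real network call.
cache = LogCache(lambda job_id: "line 1\nline 2\nBuild failed with exit code 1")
cache.tail("job-123", 1)  # first request: triggers the fetch
cache.tail("job-123", 2)  # second request: answered from the cache
print(cache.fetch_count)
```

Both tail calls above trigger only one fetch, which is the property that lets repeated questions about the same log come back quickly.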

To learn more, see Smart caching and storage in the docs.

Specialized tooling for monitoring running builds

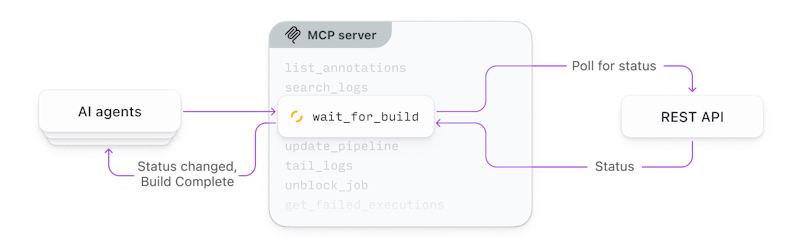

When you’re iterating on a change (or having an agent work on one for you) and pushing updates to CI, you’ll often want your agent to wait for a build to finish before taking some follow-up action — to analyze the result, say, or push a subsequent fix based on the outcome. Agents can usually handle this sort of thing on their own with extra prompting, but without the right tooling, they'll generally poll way too often, hitting API endpoints that return heavy payloads (like in-progress logs), burning through tokens and filling context windows while you wait.

For situations like these, we built a specialized tool, wait_for_build, that encapsulates all of the logic of polling to keep your agents from spinning on needless work. When invoked, the wait_for_build tool blocks on a single long-running operation that manages all polling and response handling logic internally to keep network activity to a minimum and agents from absorbing unnecessary context.

The wait_for_build tool handles polling internally to cut down on wasteful LLM interactions.

Where possible, the tool also streams real-time progress notifications to MCP clients that support it, and when it sees that the build’s reached a terminal or blocked state, it returns the result to the agent.
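For a rough picture of what "handling polling internally" can look like, here's a small Python sketch. It illustrates the general pattern only, and it isn't how wait_for_build is actually implemented. The poll_until_done function, its parameters, the state names in DONE_STATES, and the exponential backoff policy are all assumptions chosen for the example.

```python
import time
from typing import Callable

# Assumed state names for illustration; the real tool's list may differ.
DONE_STATES = {"passed", "failed", "canceled", "blocked"}


def poll_until_done(
    get_state: Callable[[], str],
    on_progress: Callable[[str], None] = lambda state: None,
    initial_delay: float = 1.0,
    max_delay: float = 30.0,
    timeout: float = 1800.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll get_state with exponential backoff until a terminal or blocked state."""
    delay = initial_delay
    waited = 0.0
    while True:
        state = get_state()  # a cheap status check, not a full log download
        if state in DONE_STATES:
            return state  # only this final result goes back to the agent
        on_progress(state)  # optional progress notification for clients that want it
        if waited >= timeout:
            raise TimeoutError(f"build still {state} after {waited:.0f}s")
        sleep(delay)
        waited += delay
        delay = min(delay * 2, max_delay)  # back off so a long build costs few requests


# Simulated build: the states and the no-op sleep are stand-ins for real API calls.
states = iter(["scheduled", "running", "running", "passed"])
result = poll_until_done(lambda: next(states), on_progress=print, sleep=lambda s: None)
print("final state:", result)
```

The agent makes one tool call and gets one answer. The intermediate states never enter its context unless the client chooses to surface the progress notifications.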

To learn more, see the Builds section of the MCP server tools documentation.

What can you do with the MCP server?

As of this latest round of improvements, you can now:

- Bootstrap new pipelines easily with tools like create_pipeline that make the experience as much like using the Buildkite dashboard as possible. (Demo below!)

- Debug and fix build failures more efficiently knowing your agents get only the context they need, with tools like wait_for_build that help tighten the feedback loop.

- Onboard new team members quickly with the tools they need to understand complex pipelines by asking questions that lead to clear, complete answers.

- Continuously improve the delivery process by having your agents monitor and analyze patterns in your builds and pipelines and identify opportunities for improvement.

Here’s a quick demo that shows how simple it is to generate a new Buildkite pipeline with the MCP server from your existing codebase:

For a complete list of the MCP tools (and toolsets) we now support and how to use them, see Available MCP tools in the docs.

Bonus: LLM-friendly docs

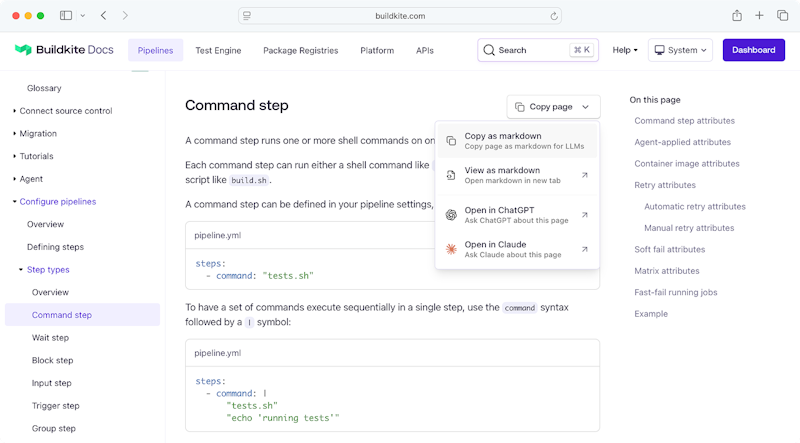

In addition to the MCP server itself, we've also made a bunch of improvements to our documentation to better support your AI-assisted workflows.

Every page of the Buildkite documentation is now available in Markdown format, with on-page controls that make it easy to copy and paste its content into your prompts for your agents to analyze:

On-page controls in the Buildkite docs make it easy to paste into your AI tool of choice.

We've also loaded our docs into the popular Context7 MCP server to give your agents the most up-to-date (and token-efficient!) documentation available. Just install Context7 alongside the Buildkite MCP server, and your agents immediately get access to all of the information they need to produce better, more accurate results, with far fewer hallucinations.

Wrapping up, and next steps

It's been a wild ride this year with the rise of AI agents and MCP, and we're all (collectively, as an industry) learning more every day — seeing what works, what doesn't, what could be better, and where to go next. It's a big reason why we've chosen to build in the open: your feedback, ideas, and contributions all help us improve the experience of using the Buildkite MCP server for everyone.

Whether you're already all-in on agentic AI or just getting started, we'd love for you to give the server a try and let us know what you think. A few suggestions:

- Dive into the MCP server docs to learn about what you can do with it

- Keep an eye on our YouTube channel for more demos and how-tos like this one

- Follow the MCP server repo on GitHub to get notified of new features and capabilities as they land (we're not slowing down)

- File an issue with your ideas — or better, submit a pull request! We welcome community submissions and we'd love to add your name to the contributors list

Happy building!