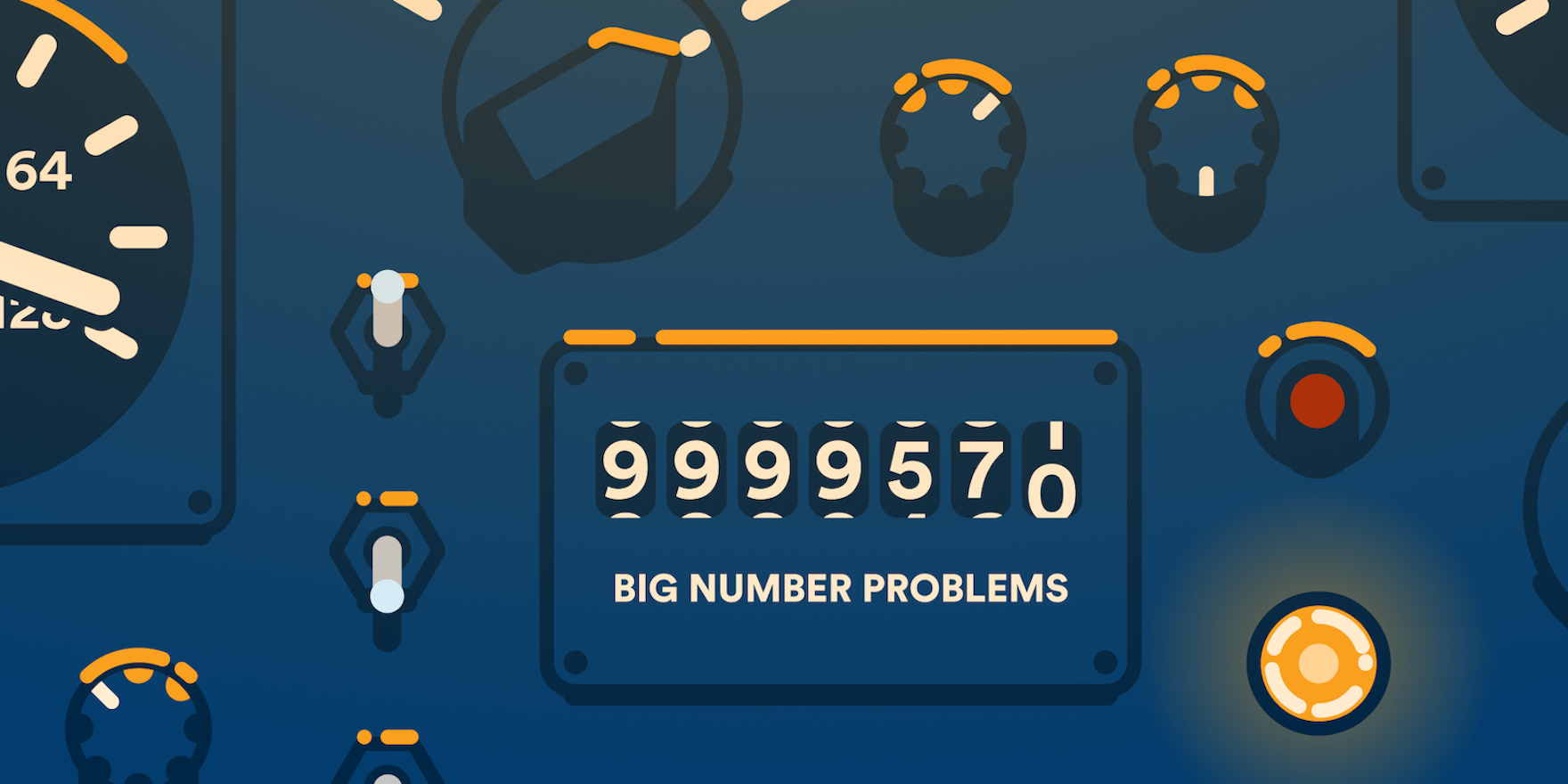

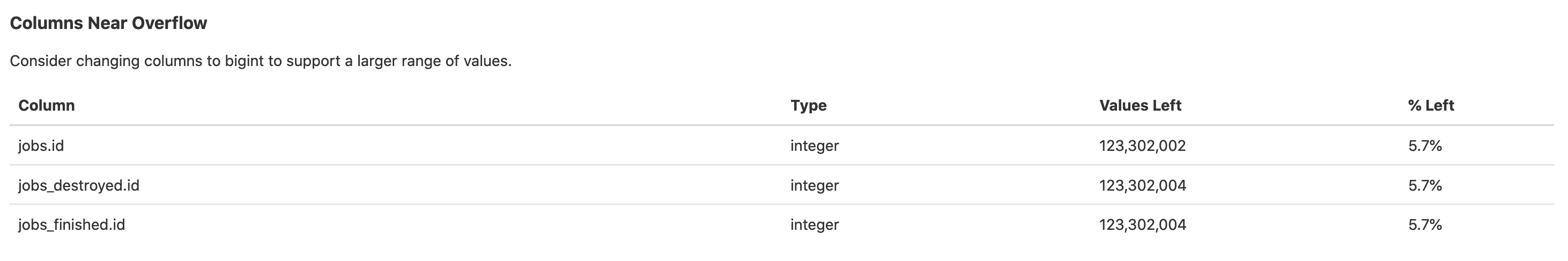

Buildkite is a Rails majestic monolith with a really big PostgreSQL database. Most of our core tables were set up a long time ago and use integer primary keys, which were the default at the time. We monitor for integer overflows, and in the middle of September last year it was finally time to tackle one of our largest tables.

There were over 2 billion rows in this table across three partitions covering 8.5TB, plus plenty more rows with foreign keys with the same data type. They all needed to be migrated to the larger bigint type.
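To put the overflow risk in numbers: a PostgreSQL `integer` column tops out at 2,147,483,647. The sketch below shows the kind of arithmetic an overflow monitor might do. The method names, the sample sequence value and the growth rate are all illustrative assumptions, not figures from our system; in practice the current value could come from something like `SELECT last_value FROM jobs_id_seq`.

```ruby
# Largest value a PostgreSQL `integer` (int4) column can hold.
INT4_MAX = 2**31 - 1 # 2_147_483_647

# Fraction of the integer key space already consumed by a sequence.
def int4_usage(last_value)
  last_value.to_f / INT4_MAX
end

# Whole days of runway left, assuming a constant (hypothetical) number of
# new ids per day.
def days_until_overflow(last_value, ids_per_day)
  raise ArgumentError, "ids_per_day must be positive" unless ids_per_day.positive?

  (INT4_MAX - last_value) / ids_per_day
end

# Hypothetical numbers, for illustration only.
last_value = 2_000_000_000
puts format("%.1f%% of the integer range used", int4_usage(last_value) * 100)
puts "#{days_until_overflow(last_value, 1_000_000)} days of runway"
```

An alert on the usage fraction is simple to reason about, while the days-of-runway figure is more useful for planning how soon a migration has to start.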

Creating a new bigint column

Changing the data type in place wasn’t an option: that would lock and rewrite the tables, which would require days to weeks of downtime. The simplest zero-downtime strategy was to add a new column with the bigger data type, start writing to both columns, backfill the new column, and then swap the columns.

So a simplified example of our problem is a table with an integer primary key, like:

    CREATE TABLE jobs (
      id SERIAL PRIMARY KEY,
      name TEXT NOT NULL,
      state job_state NOT NULL DEFAULT 'pending',
      ...
    );

and a matching ActiveRecord model. Rails inspects the schema of the database to generate methods for each column when the models are first used. To let us change them with zero downtime, the model ignores both the column we're about to add and the intermediate column we'll use when swapping the new column into place.

    class Job < ActiveRecord::Base
      self.ignored_columns = ["id_bigint", "id_integer"]
    end

We use ActiveRecord migrations for all our database changes. This gives us a versioned database schema and lets us deploy changes to it safely and incrementally. First we'll add a new column, and a trigger to write both columns for new records:

    add_column :jobs, :id_bigint, :bigint

    execute <<~SQL
      CREATE OR REPLACE FUNCTION jobs_copy_id_bigint() RETURNS TRIGGER AS $$
      BEGIN
        NEW.id_bigint = NEW.id;
        RETURN NEW;
      END;
      $$ LANGUAGE plpgsql;

      CREATE TRIGGER jobs_copy_id_bigint
        BEFORE INSERT OR UPDATE ON jobs
        FOR EACH ROW WHEN ( NEW.id_bigint IS NULL )
        EXECUTE FUNCTION jobs_copy_id_bigint();
    SQL

Keeping it zero-downtime

There's a risk that we'll make a change which takes longer than we expect and impacts production availability. This can be managed through careful use of statement timeouts, which short-circuit long-running migrations before they interfere with production traffic. For example, we did not add an index above because it requires a full table scan, which would take far too long to complete within the transaction that ActiveRecord wraps around every migration. Instead, we make sure to disable the transaction and build the index concurrently in a separate migration:

    # Requires disable_ddl_transaction! in the migration class
    add_index :jobs, :id_bigint, unique: true, algorithm: :concurrently

Rails continues to function, none the wiser, and we’re transparently writing to both columns. Now the new column can be backfilled for existing records, too. We started a background task, doing roughly:

    Job.in_batches.update_all("id_bigint = id")

We distributed this across some threads with backoff controls based on database load, and after letting it run for a while we were comfortable that this would complete in plenty of time.
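One way to picture the backoff control is the loop below. It is a sketch under stated assumptions, not the task described above: `backfill`, `update_batch`, `load_probe`, the 0.7 load threshold and the sleep values are all hypothetical, and the database work is injected as lambdas so the loop can be tried without a database.

```ruby
# Walks an id range in fixed-size batches and pauses while the database looks
# busy. `update_batch` and `load_probe` are hypothetical stand-ins supplied by
# the caller; `sleeper` is injectable so the loop can be exercised quickly.
def backfill(min_id:, max_id:, batch_size:, update_batch:, load_probe:,
             max_load: 0.7, base_sleep: 0.5, max_sleep: 30.0,
             sleeper: method(:sleep))
  delay = base_sleep
  start = min_id
  while start <= max_id
    if load_probe.call > max_load
      # Database is busy: wait, then double the delay up to the cap.
      sleeper.call(delay)
      delay = [delay * 2, max_sleep].min
      next
    end

    delay = base_sleep # load is acceptable again, reset the backoff
    finish = [start + batch_size - 1, max_id].min
    update_batch.call(start, finish)
    start = finish + 1
  end
end

# Dry run with fake load readings. In a Rails app, update_batch might instead be
#   ->(a, b) { Job.where(id: a..b).where("id_bigint IS NULL").update_all("id_bigint = id") }
loads = [0.9, 0.2, 0.2, 0.2].each
batches = []
pauses = []
backfill(min_id: 1, max_id: 25, batch_size: 10,
         update_batch: ->(a, b) { batches << [a, b] },
         load_probe: -> { loads.next },
         sleeper: ->(seconds) { pauses << seconds })
puts "batches: #{batches.inspect}, pauses: #{pauses.inspect}"
```

Skipping rows that already have `id_bigint` set keeps the backfill idempotent, so it can be stopped and restarted, or run from several threads over disjoint id ranges.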

Swapping the columns

Once the backfill was done, it was time to change the primary key. By itself this is a relatively instantaneous operation, but it requires that the new column have a NOT NULL constraint. Marking a column as NOT NULL used to require an exclusive lock and table scan, but since PostgreSQL 12 the constraint can be added without a scan if the database can prove the column cannot contain NULLs. The best way to do this is with a CHECK constraint, which can be created as NOT VALID and then validated without blocking writes.

    ALTER TABLE jobs
      ADD CONSTRAINT id_bigint_not_null
      CHECK (id_bigint IS NOT NULL)
      NOT VALID;

    ALTER TABLE jobs VALIDATE CONSTRAINT id_bigint_not_null;

    ALTER TABLE jobs ALTER COLUMN id_bigint SET NOT NULL;

    ALTER TABLE jobs DROP CONSTRAINT id_bigint_not_null;

We had a few partial indexes on this table including the primary key which are used for index-only scans, so we concurrently created equivalent indexes on the new column as well.
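The same four-step NOT NULL sequence applies to any column migrated this way, so it can be handy to generate it. The helper below is a hypothetical sketch: the method name and the `<column>_not_null` constraint naming are assumptions that simply mirror the statements above, and it performs no identifier quoting, so it is only suitable for trusted, hard-coded names.

```ruby
# Builds the statements that mark a column NOT NULL without a blocking scan:
# add an unvalidated CHECK, validate it, set NOT NULL, drop the CHECK.
# Hypothetical helper; table and column names are interpolated unquoted.
def not_null_without_scan_sql(table, column)
  constraint = "#{column}_not_null"
  [
    "ALTER TABLE #{table} ADD CONSTRAINT #{constraint} " \
      "CHECK (#{column} IS NOT NULL) NOT VALID",
    "ALTER TABLE #{table} VALIDATE CONSTRAINT #{constraint}",
    "ALTER TABLE #{table} ALTER COLUMN #{column} SET NOT NULL",
    "ALTER TABLE #{table} DROP CONSTRAINT #{constraint}"
  ]
end

# Each statement would be passed to `execute` in its own migration step.
not_null_without_scan_sql("jobs", "id_bigint").each { |sql| puts "#{sql};" }
```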

Now that the new column is complete, we can make it canonical. The columns are already ignored and so won't interfere with regular operations, so we can safely do a swap, again without downtime beyond a momentary lock on the jobs table in the middle of running transactions.

    /* Stop writing to the new column, takes an exclusive table lock */
    DROP FUNCTION jobs_copy_id_bigint CASCADE;

    /* Swap the new column into place */
    ALTER TABLE jobs RENAME COLUMN id TO id_integer;
    ALTER TABLE jobs RENAME COLUMN id_bigint TO id;

    /* Transfer ownership of the primary key sequence */
    ALTER SEQUENCE jobs_id_seq OWNED BY jobs.id;
    ALTER TABLE jobs ALTER COLUMN id SET DEFAULT nextval('jobs_id_seq'::regclass);
    ALTER TABLE jobs ALTER COLUMN id_integer DROP DEFAULT;

    /* Replace the primary key constraint */
    ALTER TABLE jobs DROP CONSTRAINT jobs_pkey;
    ALTER INDEX index_jobs_on_id_bigint RENAME TO jobs_pkey;
    ALTER TABLE jobs ADD CONSTRAINT jobs_pkey PRIMARY KEY USING INDEX jobs_pkey;

The table is safe! 🎉 We kept writing to the old column as well while we validated that everything went according to plan, but were satisfied fairly quickly and then dropped the old column.
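Because the swap needs brief exclusive locks, a common way to keep the wait bounded is to set a short `lock_timeout` inside the transaction and retry if it expires. The sketch below shows only the retry shape and is not necessarily how this swap was run: `with_lock_retries` and `LockTimeout` are hypothetical names, and the real error class depends on the database driver.

```ruby
# Stand-in for whatever error the driver raises when lock_timeout expires;
# the real class name depends on the driver (assumption).
class LockTimeout < StandardError; end

# Runs the block, retrying with a growing pause whenever it raises
# LockTimeout. Re-raises once `attempts` tries have failed.
def with_lock_retries(attempts: 5, base_sleep: 1.0, sleeper: method(:sleep))
  tries = 0
  begin
    tries += 1
    yield tries
  rescue LockTimeout
    raise if tries >= attempts

    sleeper.call(base_sleep * tries) # linear backoff between attempts
    retry
  end
end

# Usage sketch with a fake swap that hits the lock timeout twice.
pauses = []
result = with_lock_retries(sleeper: ->(seconds) { pauses << seconds }) do |try|
  # Real code would open a transaction, run
  #   SET LOCAL lock_timeout = '2s'
  # and then the ALTER TABLE statements from the swap.
  raise LockTimeout if try < 3

  :swapped
end
puts "#{result} after pauses #{pauses.inspect}"
```

Failing fast and retrying means the migration never queues behind a long-running transaction while holding up every other query waiting on the same table.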

Next steps

Now we needed to tackle the foreign keys.

Some of the foreign keys were small enough that we could do an in-place rewrite of the tables with only a momentary pause. Some were bigger, but using the same strategy as the primary key finished relatively quickly. One table was too big for that approach to finish in an acceptable time, so we came up with something a bit more novel. We'll talk about that in Part 2, coming soon!

For the few remaining tables in our database still using integer primary keys, we have years of runway. We know how to solve this problem when the time comes. But for now we can focus on improving the stability and performance of our platform.