The software landscape in 2023 is complex. Actually, it’s always been, but we’re really feeling it this year. We work in distributed teams, have monolithic codebases, gargantuan test suites, and microservices that stretch as far as the eye can see. Not to mention our teams are becoming leaner and leaner.

One thing working in our favor is that we have Continuous Integration and Continuous Deployment (CI/CD) at the heart of our software delivery lifecycles. CI/CD allows us to ship code easily and frequently, with a high level of trust that our end users won’t be impacted by bugs (or at least that’s what CI/CD promises to deliver). Sometimes though, our ability to ship without friction is hampered by flaky, unreliable test suites, very slow builds, or even simply waiting around for builds to start running. We end up lacking confidence in the very system whose number one job is to provide it.

The pain of flaky tests

A flaky test is a test that passes most of the time but sometimes fails for no immediately obvious reason. Flaky tests are caused by many things, including:

- Test ordering

- Missing elements in integration specs

- Dates, time, and timezones

I began digging into the problem to understand exactly how painful flaky tests are. It turns out that in one month, Buildkite users spent a cumulative 9,413 days retrying failed steps. That’s more than 300 days wasted every day that month.

To put those numbers into context, you can get to Mars and back 17 times in 9,413 days. If you also factor in the time wasted across all CI/CD platforms (especially the ones that make you re-run the entire build for each failure rather than an individual failed step), we’ve suddenly got time to explore strange new worlds and seek out new life and civilizations at the very edge of our galaxy.

Back when I was a junior developer, there was a smoke test in our pipeline that rarely passed. I recall asking, “Why is this test failing?” The Senior Developer I was pairing with answered, “Ohhh, that one, yeah it hardly ever passes.” From that moment on, every time I saw a CI failure, I wondered: “Is this a flaky test, or a genuine failure?”

The additional overhead of lost flow (and focus) is real: we become distracted and potentially occupy ourselves with X or Bluesky scrolls and Slack messages. According to a University of California, Irvine study, it takes over 23 minutes to get back on task after an interruption. So if we’re playing whack-a-mole with flaky tests while battling mega-slow builds, we’re in a very bad place.

Developers need to be able to rely on the systems and tools they use to get the job done–our CI/CD systems need to provide fast, reliable feedback about the software we’re delivering. When that doesn’t happen, we’ve got some problems, and so do the end users of our software.

SRE principles to the rescue

Regardless of whether you can literally deploy on a Friday or not, asking, “Can I deploy on a Friday afternoon?” is an awesome way to gauge a team’s sentiment about how reliable their pipeline-to-production workflows are. We should all be able to say yes when asked the question, and if we can’t, we have some work to do to restore trust.

It turns out Site Reliability Engineers (SREs) know a thing or two about ensuring our systems are reliable (as the name suggests), so let’s look at some of the principles they use to guide their efforts.

Google’s book Site Reliability Engineering - How Google Runs Production Systems compares DevOps to SRE:

"The term “DevOps” emerged in industry in late 2008…Its core principles—involvement of the IT function in each phase of a system’s design and development, heavy reliance on automation versus human effort, the application of engineering practices and tools to operations tasks—are consistent with many of SRE’s principles and practices."

Site Reliability Engineering - How Google Runs Production Systems

It goes on to say that DevOps could be viewed as a generalization of several core SRE principles to a wider organizational context. And that SRE could be viewed as a specific implementation of DevOps with some idiosyncratic extensions.

DevOps thinking encourages us to see accidents as a normal part of software delivery, and to recognize that tooling, human systems, and culture are interrelated. Blameless culture evolved from the first of these principles. DevOps is a way of thinking and working focused on bringing people together (from Development and Operations), improving collaboration, and leveraging automation and tooling to further improve how we deliver software. SRE, on the other hand, is a little more focused on the practical: improving operational practices, efficiency, and as the name suggests, the reliability of our core systems.

Only as reliable as strictly necessary

"...it is difficult to do your job well without clearly defining well. SLOs provide the language we need to define well."

Theo Schlossnagle, Circonus, Seeking SRE

Common principles in both DevOps and SRE involve measurement, observability, and information about the health of systems and services. Whilst SRE works to ensure systems are reliable, 100% reliability is never the goal. SRE seeks to ensure systems are only as reliable as strictly necessary.

SRE uses:

- Service Level Objectives (SLO) to define what level of reliability is guaranteed.

- Service Level Indicators (SLI) to measure how things are tracking against the SLO.

- Error Budgets to reflect how much, or for how long, a service can fail to meet the SLO without consequence.
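To make the relationship between these three terms concrete, here is a minimal Python sketch. The class name, field names, and method are hypothetical and chosen only for illustration; they are not part of any SRE tool. The sample values are borrowed from the build wait time example in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slo:
    """Hypothetical container tying an SLO to its SLI and error budget."""

    objective: str     # the SLO: the agreed target, in plain language
    indicator: str     # the SLI: the measurement tracked against the target
    target: float      # the value the SLI is compared with
    error_budget: int  # misses tolerated per window without consequence
    window_days: int   # length of the window the budget applies to

    def budget_remaining(self, misses: int) -> int:
        """Return how many more misses the window can absorb (negative if overspent)."""
        return self.error_budget - misses


# Illustrative instance: builds should start within 60 seconds,
# with 33 late starts tolerated in a four-week (28-day) window.
build_wait = Slo(
    objective="Builds should start running within one minute",
    indicator="Total wait time for a build to start (seconds)",
    target=60.0,
    error_budget=33,
    window_days=28,
)
```

Keeping all three values in one record is a design choice that makes it harder to discuss a target without also stating how it is measured and how much failure is acceptable.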

"SLIs/SLOs shift the mindset from ‘I’m responsible for X service in a very complex, vague backend environment way’ to 'If I don’t meet this SLO my customer is going to be unhappy.'"

Lucia Craciun & Dave Sanders, Putting Customers first with SLIs and SLOs, The Telegraph (Engineering)

We need solid metrics as an objective foundation for conversations in our teams and with leadership, and we need to agree that these metrics represent an accurate picture of reality. If we rely on data to actualize the cost associated with developer pain, it’s no longer about feelings and is far easier to mitigate. SLOs, SLIs, and Error Budgets provide a framework to prioritize the upkeep and maintenance of key systems, which can often be the hardest thing to make time for. SREs generally apply these to production services and systems, but there’s no reason we can’t apply them to CI/CD.

Getting started with SLOs

Start by understanding what everyone involved expects from the system, then focus on building a shared understanding.

Ask some questions:

- What is the system in question?

- Is it CI, CD, or both?

- Are you limiting the scope to an application’s test suite?

- What about the test suite? Speed? Reliability?

- Who are the system’s different stakeholders?

- Who relies on the system?

- Who maintains the system?

- What is important to everyone?

- What’s currently working?

- What isn’t working?

- What needs to improve?

Once you have built this shared understanding, it’s time to agree on some SLOs, SLIs, and reasonable error budgets.

For example, if you want to reduce the time developers need to wait for a build to kick off, a good SLO could be:

- SLO: Builds should start running within one minute.

- SLI: Total wait time for a build to start.

- Error budget: 33 builds that take more than 1 minute to start running in a four-week period.
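As a rough sketch of how this SLO could be checked, the Python below counts late starts from a list of wait times and compares the count with the budget. The function names are hypothetical, and the wait times are assumed to have been collected already, in seconds, for a single four-week period.

```python
from typing import Sequence

SLO_SECONDS = 60   # SLO: builds should start running within one minute
ERROR_BUDGET = 33  # late starts tolerated in a four-week period


def late_starts(wait_seconds: Sequence[float], slo_seconds: float = SLO_SECONDS) -> int:
    """Count builds whose wait time (the SLI) exceeded the SLO."""
    return sum(1 for wait in wait_seconds if wait > slo_seconds)


def within_budget(wait_seconds: Sequence[float], budget: int = ERROR_BUDGET) -> bool:
    """True while the number of late starts has not gone over the error budget."""
    return late_starts(wait_seconds) <= budget
```

A build that waits exactly 60 seconds is treated as meeting the SLO here; whether the boundary counts as a miss is something the team would need to agree on.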

Another example might involve the need to have speedy feedback loops:

- SLO: Developers have their commits tested, and are notified of the result, within five minutes.

- SLI: Total build run time.

- Error budget: 33 builds that finish in more than five minutes in a four-week period.

Or you might want to mitigate the problems associated with flaky tests:

- SLO: Test suite reliability should be greater than 87%.

- SLI: Test suite reliability score.

- Error budget: 77 test runs with a reliability score of less than 87% in a four-week period.

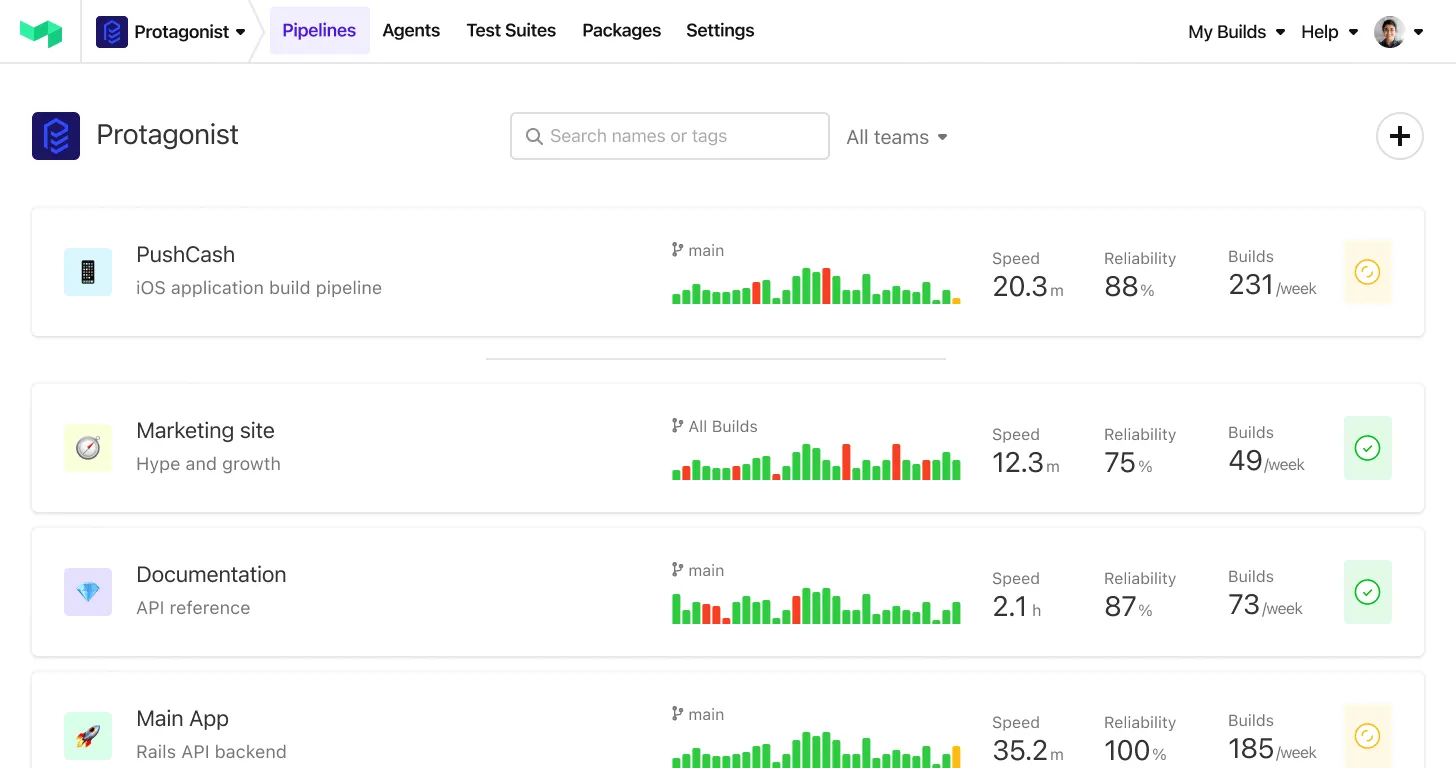

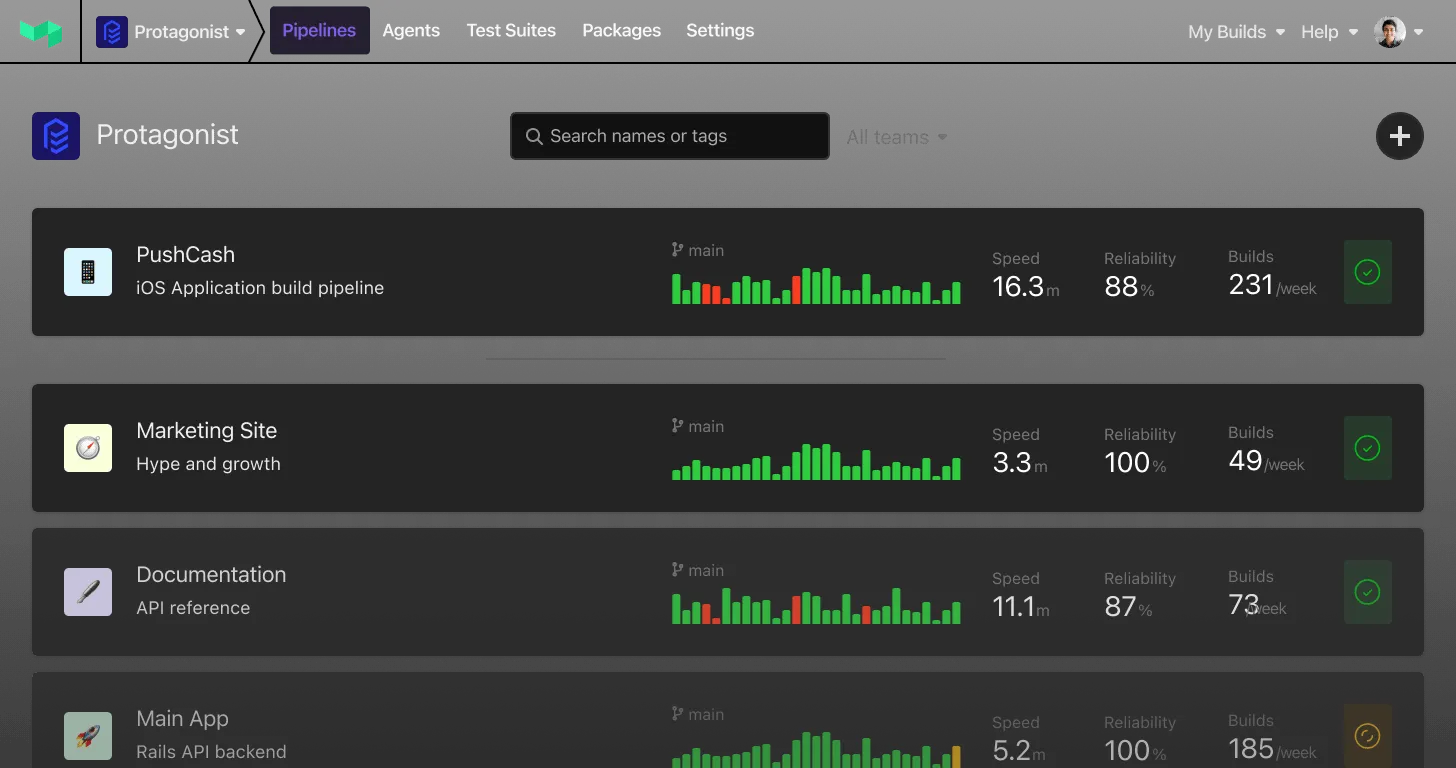
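For the flaky test example, the sketch below shows one way a reliability score and its error budget could be evaluated. It assumes reliability means the percentage of test executions that passed, which is an assumption for illustration; check how your own platform defines its score. The function names are hypothetical.

```python
from typing import Iterable, Tuple

RELIABILITY_SLO = 87.0  # percent, from the SLO above
ERROR_BUDGET = 77       # low-reliability runs tolerated in a four-week period


def reliability_score(passed: int, failed: int) -> float:
    """Percentage of executions that passed (assumed definition of the SLI)."""
    total = passed + failed
    if total == 0:
        raise ValueError("no executions to score")
    return 100.0 * passed / total


def runs_below_slo(runs: Iterable[Tuple[int, int]], slo: float = RELIABILITY_SLO) -> int:
    """Count test runs, given as (passed, failed) pairs, that score below the SLO."""
    return sum(1 for passed, failed in runs if reliability_score(passed, failed) < slo)
```

The count returned by `runs_below_slo` is what gets compared against the budget of 77. A run scoring exactly 87% is not counted as a miss, following the wording of the error budget.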

Your SLOs will naturally evolve from understanding what everyone expects from the system. How you get your SLIs will vary depending on what CI/CD platform you use and what metrics you need to collect. SLIs like build wait time and total build run time should be available via your CI/CD platform. Buildkite has OpenTelemetry tracing built into the agent, which allows you to send build agent health and performance metrics to an OpenTelemetry collector, and a CLI tool to request build runtime metrics from the API, so they can be collected and visualized as you need. For test suite reliability, Buildkite has tooling to detect and manage flaky tests, with a reliability percentage score for each test suite. Honeycomb and Datadog also have products that integrate with CI tools to provide valuable metrics and insights.
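If your platform exposes per-build timestamps, both timing SLIs reduce to simple subtraction. The sketch below assumes each build record is a dict with ISO 8601 `scheduled_at`, `started_at`, and `finished_at` fields. Those field names are an assumption for illustration, so map them to whatever your CI/CD platform's API actually returns.

```python
from datetime import datetime


def _parse(timestamp: str) -> datetime:
    """Parse an ISO 8601 timestamp, accepting a trailing 'Z' for UTC."""
    # datetime.fromisoformat rejects 'Z' before Python 3.11, so normalize it.
    return datetime.fromisoformat(timestamp.replace("Z", "+00:00"))


def wait_seconds(build: dict) -> float:
    """SLI for build wait time: seconds between scheduling and starting."""
    return (_parse(build["started_at"]) - _parse(build["scheduled_at"])).total_seconds()


def run_seconds(build: dict) -> float:
    """SLI for build run time: seconds between starting and finishing."""
    return (_parse(build["finished_at"]) - _parse(build["started_at"])).total_seconds()


# Hypothetical build record, for illustration only.
sample_build = {
    "scheduled_at": "2023-05-01T10:00:00Z",
    "started_at": "2023-05-01T10:00:45Z",
    "finished_at": "2023-05-01T10:04:45Z",
}
```

With the sample record, the build waited 45 seconds and ran for 240 seconds, so it would meet both the one-minute start SLO and the five-minute feedback SLO.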

Using error budgets to maintain focus

"SLOs are a powerful weapon to wield against micromanagers, meddlers and feature-hungry PMs. They are an API for your engineering team."

Charity Majors, SLOs Are the API for Your Engineering Team

Let’s look at the error budget in our test suite reliability-related SLO above:

- SLO: Test suite reliability should be greater than 87%.

- SLI: Test suite reliability score.

- Error budget: 77 test runs with a reliability score of less than 87% in a four-week period.

While we are “within budget,” we can maintain momentum and focus on the work we’re already doing, ignoring any issues that may distract us. However, once that budget is spent, and we’ve had more than 77 test suite executions with a reliability score of under 87% in four weeks, we’ll need to have brokered an agreement on what happens. Ideally, your teams would shift focus to the work required to get your SLI back to meeting your SLO.
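One way to turn that agreement into something checkable is sketched below. It assumes a rolling four-week window ending today (a fixed calendar window would work just as well) and that you have the date of each test run that scored under 87%. The function names and the two outcome labels are hypothetical.

```python
from datetime import date, timedelta
from typing import Iterable

ERROR_BUDGET = 77            # low-reliability runs tolerated per window
WINDOW = timedelta(weeks=4)  # four-week period, assumed to roll forward daily


def budget_spent(miss_dates: Iterable[date], today: date,
                 budget: int = ERROR_BUDGET, window: timedelta = WINDOW) -> bool:
    """True once more than `budget` misses fall inside the window ending today."""
    window_start = today - window
    recent = sum(1 for day in miss_dates if window_start < day <= today)
    return recent > budget


def next_focus(miss_dates: Iterable[date], today: date) -> str:
    """Return the agreed response: keep going, or shift to restoring the SLO."""
    return "reliability work" if budget_spent(miss_dates, today) else "planned work"
```

Misses older than four weeks drop out of the count on their own, so the team returns to planned work once recent runs are back within budget.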

The prospect of grinding to a halt to fix things once an error budget is spent can be a huge point of contention when implementing SLOs and SLIs. But since everyone has agreed on what’s important to track and you have SLI metrics in place, those discussions can center on hard facts and the visible monetary cost of developer pain.

SLOs do more than help us remain focused on our work. In her blog post SLOs Are the API for Your Engineering Team, Honeycomb CTO Charity Majors says, “SLOs give you the ability to push back when demands from other parties exceed your capacity to deliver what the business has deemed most important.” This sounds like the kind of thing we need to be able to lean on from time to time.

Conclusion

If you’re not sure how to get started, start small! With one SLO. For that SLO, guarantee to maintain the level of reliability the system currently performs at. That’s a great first step, and you can commit to a better percentage when you’re further along in your SRE practices.

It’s important to remember that SLOs, SLIs, and error budgets are a journey. There may be dragons, but change is fine, and you can keep revising these agreements until they work for everyone. Understand and define expectations, set some SLOs, and prioritize mitigating developer pain to rebuild trust in your system, because everyone should be able to deploy on a Friday afternoon (even if they can’t).

If you’ve tried this approach, let us know what’s worked for you on X.

This post is based on my talk with the same title: Applying SRE Principles to CI/CD.