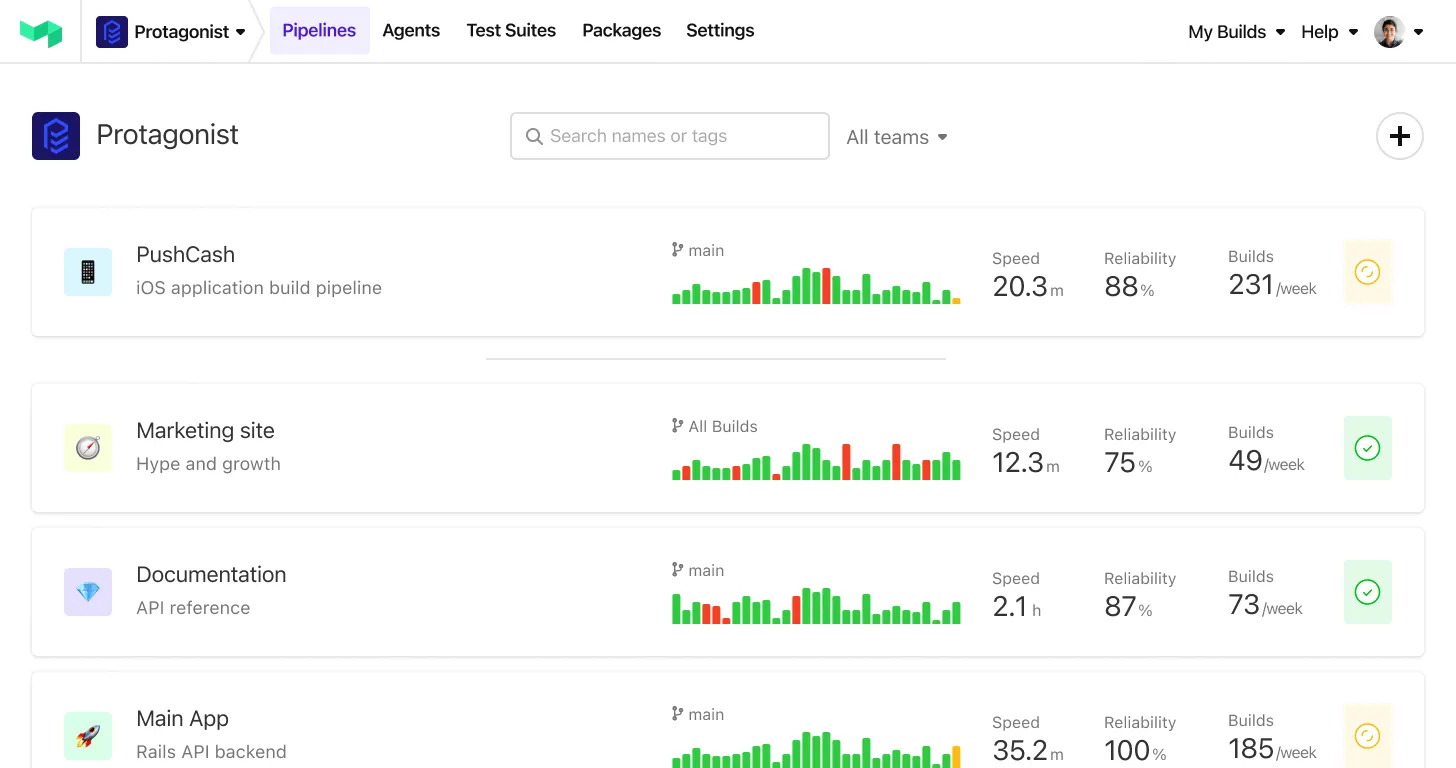

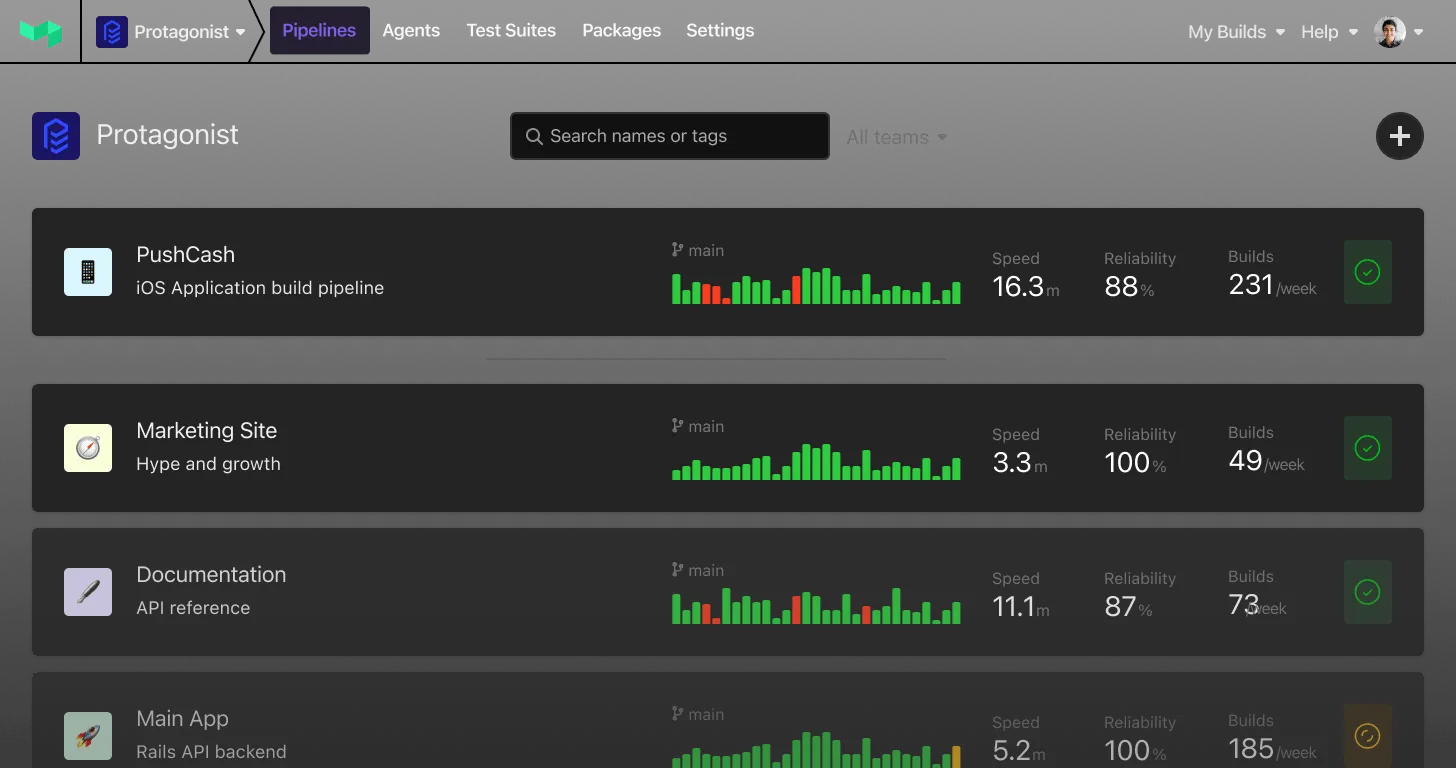

Thousands of software development teams around the world build and deploy their software using Buildkite every day. Their software powers industries and products as different as self-driving cars, retail, and software as a service, and we are aware of the responsibilities we take on by being part of their development cycle. In our goal to unblock development teams, reliability is our number one feature, and we take any failure to deliver on this promise very seriously.

Given those expectations, and the reliability incidents that we experienced in late 2021, we promised we’d undergo a Reliability Review during Q1 2022. The goal was to gain a better understanding of each of those incidents, identify patterns, and get a list of recommendations that could dramatically improve Buildkite’s reliability. This blog post is a summary of what’s changing at Buildkite based on what we learned during the Review.

SLIs and SLOs: What our customers (and we) really care about

We take the observability and performance monitoring of our services very seriously. Every engineer at Buildkite has the tools and skills to inspect old and new logs, dive deep into any trace of any bug we come across with ample historical context, and query any metric we’d ever need.

Two things we hadn’t yet defined as a team were our Service Level Indicators (SLIs) and our Service Level Objectives (SLOs). Service Level Indicators define the metrics that both we and our customers really care about. Service Level Objectives define the levels those metrics need to maintain so our customers have a satisfactory experience.
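To make the distinction concrete, here is a minimal sketch of an SLI expressed as a ratio of good events to total events, checked against an SLO target. The class, the function names, the example SLO, and the numbers are all hypothetical and are not taken from Buildkite's actual definitions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slo:
    """A hypothetical SLO: the SLI must stay at or above `target` (a ratio)."""
    name: str
    target: float


def sli_ratio(good_events: int, total_events: int) -> float:
    """SLI as the fraction of events that met customer expectations."""
    if total_events == 0:
        return 1.0  # no traffic in the window: treat the objective as met
    return good_events / total_events


def meets_slo(slo: Slo, good_events: int, total_events: int) -> bool:
    """True when the measured SLI is at or above the SLO target."""
    return sli_ratio(good_events, total_events) >= slo.target


if __name__ == "__main__":
    # Illustrative objective and counts only.
    slo = Slo(name="jobs dispatched promptly", target=0.999)
    print(meets_slo(slo, good_events=99_950, total_events=100_000))
```

The useful property of this shape is that the SLI is phrased in terms of what a customer experiences (an event was good or it was not), rather than in terms of the health of any one internal service.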

Each team at Buildkite has now started defining their SLOs and SLIs, and we are using Datadog to track our first SLOs. Our main dashboards will no longer be just a collection of metrics from our individual services; they will show the current state of the main product metrics our customers care about. This includes third-party services and combinations of individual service metrics.

This will be an ongoing effort. We’ll keep adding more SLOs and making sure we are accurately tracking real customer impact, but we are already in a much better position than we were a few months ago.

Error Budgets

An error budget reflects how much (or for how long) a service can fail to meet our customers’ expectations without consequences.

This can be seen from two different perspectives:

- Contractual Uptime SLAs: We offer 99.95% uptime to our Enterprise Customers. That means that each month we have a budget of 21m 54s of downtime before it is exhausted.

- Internal SLOs: Beyond uptime, we’ve defined (as explained before) the levels of service that our customers (and we) expect from our features during a given period of time. If our services fail to meet those expectations for long enough, the budget will be exhausted.
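The 21m 54s figure can be reproduced with a few lines of arithmetic. The sketch below assumes an average month of 365/12 days, which is the period length that yields exactly that number; a 30-day month would give 21m 36s instead. The function names are illustrative.

```python
from decimal import Decimal

SECONDS_PER_DAY = 24 * 60 * 60

# Assumption: an "average" month is one twelfth of a 365-day year.
AVERAGE_MONTH_SECONDS = 365 * SECONDS_PER_DAY // 12  # 2,628,000 seconds


def downtime_budget_seconds(uptime_percent: str, period_seconds: int) -> Decimal:
    """Seconds of downtime allowed in the period for a given uptime target."""
    allowed_percent = Decimal(100) - Decimal(uptime_percent)
    return Decimal(period_seconds) * allowed_percent / Decimal(100)


def format_minutes_seconds(seconds: Decimal) -> str:
    """Render a duration as 'Xm Ys', truncating fractional seconds."""
    minutes, secs = divmod(int(seconds), 60)
    return f"{minutes}m {secs}s"


if __name__ == "__main__":
    budget = downtime_budget_seconds("99.95", AVERAGE_MONTH_SECONDS)
    print(format_minutes_seconds(budget))  # prints "21m 54s"
```

`Decimal` is used instead of floats so that 99.95% is represented exactly and the result does not pick up rounding noise.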

What happens when an Error Budget is exhausted? This is the biggest change we’ve made, and the one I’m happiest to announce: When Error Budgets are exhausted, the relevant teams will stop any ongoing feature work and move into Reliability roadmap work until the affected SLO/SLA starts performing at the level our customers expect. Everyone at Buildkite was on board with this, and I’m proud of it.
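As a rough sketch of that policy, the snippet below tracks how much of a time-based budget has been consumed and reports which kind of work the owning team should be doing. The class, the function, and the incident durations are hypothetical; only the 1,314-second budget (21m 54s) comes from the uptime SLA described earlier.

```python
from dataclasses import dataclass


@dataclass
class ErrorBudget:
    """Tracks consumption of a time-based error budget for one SLO or SLA."""
    name: str
    budget_seconds: float
    consumed_seconds: float = 0.0

    def record_bad_time(self, seconds: float) -> None:
        """Add time during which the service failed to meet its objective."""
        self.consumed_seconds += seconds

    @property
    def remaining_seconds(self) -> float:
        return max(0.0, self.budget_seconds - self.consumed_seconds)

    @property
    def exhausted(self) -> bool:
        return self.consumed_seconds >= self.budget_seconds


def next_focus(budget: ErrorBudget) -> str:
    """Policy from the text: an exhausted budget pauses feature work."""
    return "reliability work" if budget.exhausted else "feature work"


if __name__ == "__main__":
    uptime = ErrorBudget(name="enterprise uptime SLA", budget_seconds=1314)
    uptime.record_bad_time(600)  # hypothetical 10-minute incident
    print(next_focus(uptime), uptime.remaining_seconds)
    uptime.record_bad_time(800)  # hypothetical second incident
    print(next_focus(uptime), uptime.remaining_seconds)
```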

Cloud footprint

All our infrastructure runs on AWS, in a single region, and until recently, in two availability zones.

The Reliability Review identified this as a low-effort, high-impact change we could act on immediately, and so we did: since mid-February, Buildkite has been operating from a third availability zone, and it’s working as expected. We now have higher resilience to AWS single-AZ incidents.

A multi-region plan was drafted and estimated but was deprioritised in favour of other, more impactful initiatives.

Deploying when Buildkite is down

Like many of our customers, the Buildkite Engineering team uses Buildkite itself to deploy our code. Things get spicy when we need to deploy a mitigation for an incident while our main deployment tool is operating at degraded performance, or not working at all.

We have a backup tool, but it leaves too much room for human error and not every engineer at Buildkite knew how to use it. Things were not going to get any better considering we’ve doubled the size of the team in the last few months and are going to double it again soon, so we ran a documentation and training exercise to help engineers feel safer using the tool.

This is not a completed task, and there are still related roadmap items, but again we are already in a better position.

Primary Database

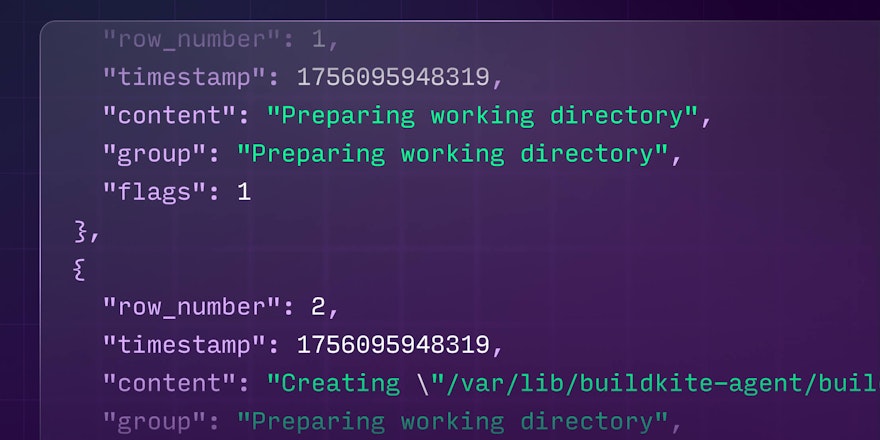

Our primary Postgres database on RDS powers all of our components (Web, API, REST API, GraphQL API, Agent API, Notifications). It’s our largest single point of failure, with the entire system affected and all customers experiencing degradation or unavailability when database load changes unexpectedly. Contention among requests locking builds and jobs was a recurring issue in the pattern of reliability incidents we experienced in November and December 2021. It caused large amounts of lock wait time, which consumed our capacity while requests waited up to 60 seconds to time out.
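To see why long lock waits eat capacity so quickly, Little's law is a handy approximation: the average number of workers tied up equals the rate at which requests become blocked multiplied by how long each one waits. The sketch below uses the 60-second timeout from the text; the blocked-request rate and the pool size are made-up numbers for illustration only.

```python
def workers_held_by_lock_waits(blocked_per_second: float, wait_seconds: float) -> float:
    """Little's law: average concurrency = arrival rate x time spent waiting."""
    return blocked_per_second * wait_seconds


def pool_fraction_consumed(blocked_per_second: float, wait_seconds: float,
                           pool_size: int) -> float:
    """Share of a worker pool occupied by blocked requests, capped at 1.0."""
    held = workers_held_by_lock_waits(blocked_per_second, wait_seconds)
    return min(1.0, held / pool_size)


if __name__ == "__main__":
    # Hypothetical: 2 requests per second get stuck behind a lock,
    # each waiting the full 60 seconds before timing out.
    print(workers_held_by_lock_waits(2, 60))
    # Hypothetical pool of 200 workers.
    print(pool_fraction_consumed(2, 60, pool_size=200))
```

Even a small trickle of blocked requests can occupy a large share of the pool when each one holds a worker for a full minute, which is why shortening the wait or removing the contention has an outsized effect.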

Its increasing size (north of 40 terabytes) is not only limiting our runway but also making it considerably harder to operate our platform and to do things like running zero-downtime migrations.

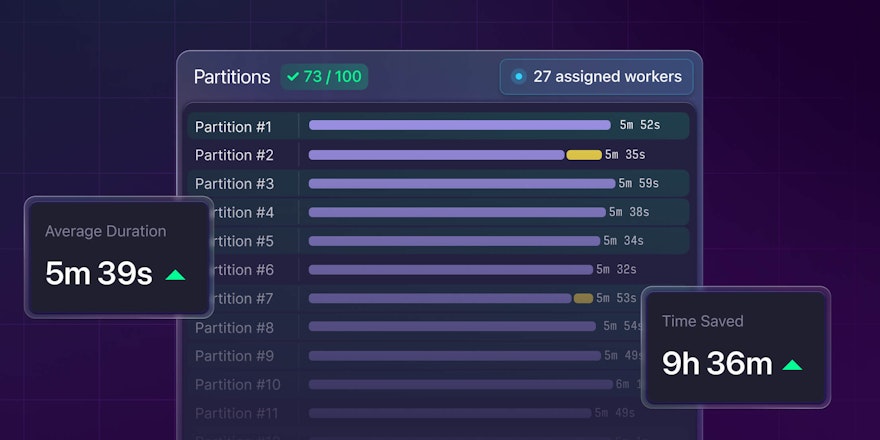

With the assistance of our AWS partners, we are already working on a potential migration to Aurora, which by the looks of it will require something we already have ongoing: partitioning of our largest tables. Deletion of old data is an ongoing investigation that could also alleviate the pressure.

A big chunk of the roadmap for our Foundation Team is database shaped, and we are hiring more Senior Database Engineers to accelerate it. Please reach out if these are the types of problems you enjoy solving at scale.

Are we done yet?

Our lowest uptime figure for February across all our services was 99.98%, the highest since August last year, and we hit 100% (pure) uptime during March. But we are far from done; this is only the beginning. Reliability is not something we consider will ever be “done”. It’s going to be an ongoing effort, because we’ll always want to do better, even though it’ll get harder as we continue growing.

We’ll keep broadcasting lessons we learn and changes we make around all things reliability, to bring all our customers and friends along this journey.