CI/CD pipelines certainly increase the reliability and speed of deployments, but as your team and codebase grow, builds can often slow down significantly. Sometimes they even grind to a halt as the system struggles to handle the increased load.

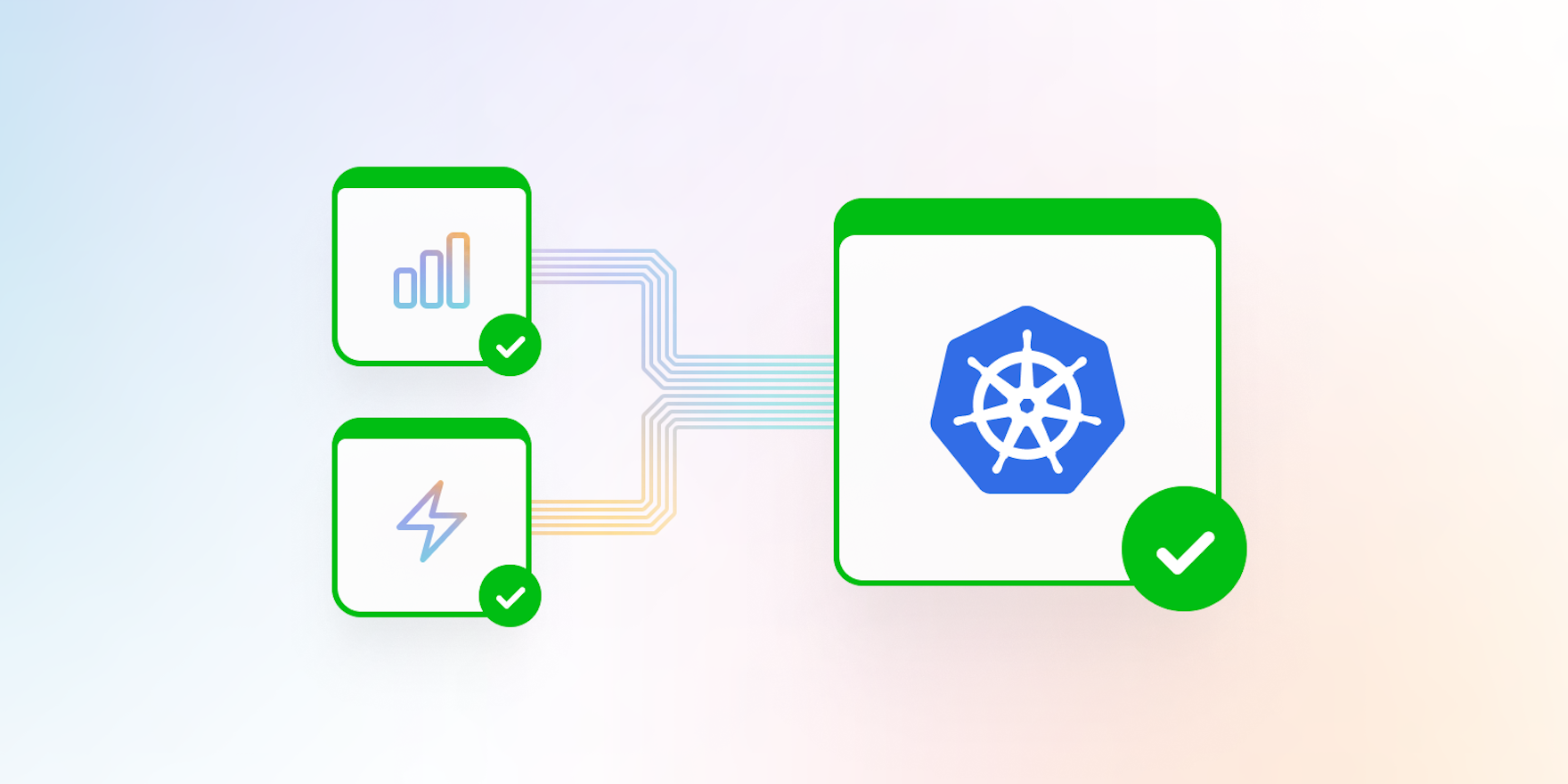

Kubernetes is ideal for automatically scaling your build agents to meet this increased demand. In this blog, we’ll look at how you can optimize your build processes by using Kubernetes to power your pipelines to production.

Why Kubernetes for CI/CD?

As a platform for orchestrating containers, Kubernetes is well known for managing dynamic workloads of any size, automatically scaling up or down to respond to changing needs. Its self-healing capabilities mean containers are restarted automatically when they fail the health checks you define. Its out-of-the-box features offer everything needed to run containers reliably, so you can avoid a hand-rolled, custom solution. You might already understand the benefits of using Kubernetes for production applications, but if you have large, centralized CI/CD pipelines that serve many teams, there are also significant advantages to running your CI/CD workloads on Kubernetes.

Responsive build scaling

The biggest benefit of using Kubernetes for CI/CD is its ability to scale. As your team and your Git repositories grow, so do the number and duration of builds. It’s common for builds to take longer and longer to finish as time progresses. Problems on the branch can stack up, causing immense frustration and complications for developers trying to merge changes. This becomes a blocker that halts releases, especially if you’re using a single, shared pipeline for multiple teams, such as with a monorepo.

Kubernetes facilitates reliable systems that can handle traffic spikes while maintaining uptime and productivity. Containers can spin up in a matter of seconds, whereas VMs can take multiple minutes. Scaling options such as replication controllers, load balancing techniques, or schedulers mean Kubernetes can scale horizontally to respond to user traffic, as well as compute or application requirements. Out-of-the-box networking capabilities allow multiple new pods and nodes to spawn in their own clusters to create stronger boundaries around environments, applications, or services.

Container images are composed of multiple layers, with each layer representing a filesystem change. These layers are stacked upon each other, and the final image is a collection of all these layers. Using smart layer caching makes rebuilding a build agent container extremely fast, because only the layers that have changed need to be rebuilt.

In CI/CD, running containerized build agents on Kubernetes means less time spent waiting for builds to kick off and finish. By running multiple containerized build agents in parallel on a Kubernetes cluster, more builds and tasks can run simultaneously, circumventing the bottlenecks when deploying at scale.

Simple rollbacks for infrastructure deployments

The ability to roll CI/CD infrastructure back is essential when making changes that don’t go to plan. By default, Kubernetes keeps the 10 most recent revisions of a Deployment's rollout history (configurable through `revisionHistoryLimit`). This makes rolling back to a previous stable version easy, helping keep systems highly available with minimal disruption to users.

Consolidated tooling

If your team already uses Kubernetes for production applications, re-using it for CI/CD makes sense. You can utilize existing expertise and knowledge, and consolidate your internal tooling. You’ll have consistent infrastructure, shared Infrastructure as Code definitions and components, and if you're monitoring your production cluster you can easily plug in and monitor your CI/CD Kubernetes cluster as well.

Consistent tooling means less cognitive overhead, greater opportunities for engineers to move around the organization, and improved efficiency.

Avoid vendor lock-in

If you’d like to design for flexibility, using containers that can run on any cloud platform with container runtime support is a wise choice. The popularity of Kubernetes means all major cloud providers have their own Kubernetes hosting solutions, which can help keep your CI/CD workloads as portable as possible, making any move to a new environment easier.

Switching cloud providers can be advantageous (and sometimes necessary) for a number of reasons, including cost reduction, platform support, tooling integrations, or simply better performance—so never say never! Kubernetes can help you keep your future options more open than closed.

How to optimize your builds with Kubernetes for CI/CD

How do you go about creating an optimal Kubernetes-powered CI/CD deployment pipeline? Here are a few best practices to follow.

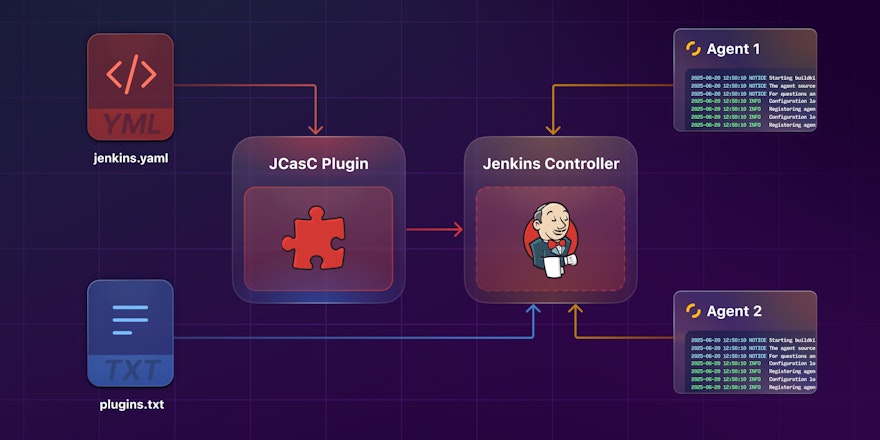

Use Infrastructure as Code (IaC) and versioned deployments

Infrastructure as Code has changed the way we manage deployments, and is the new normal for many organizations. Change processes evolve easily around checked-in, version-controlled configurations that define the infrastructure and environments on which we run our software. The workflow is familiar to developers:

- Request a change.

- Have the proposed change reviewed.

- When approved, apply it.

The audit trail of versioned, signed changes makes governance and compliance rules easier to enforce—especially when only certain accounts can apply changes.

IaC reduces the toil associated with manually provisioning resources in multiple UIs or consoles by providing visibility and consistency in a central location. Tools like Helm or Kustomize allow you to define and build containerized applications of any complexity. Other IaC tools like Terraform do the same for your cloud infrastructure and CI/CD.

Adopting a GitOps flow means deployments kick off automatically after check-in of any files that have changed: the container Dockerfile, Terraform configuration, application code, or similar.
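As a rough illustration of that trigger logic, here is a minimal Python sketch that maps changed file paths to the pipeline steps they should kick off. The glob patterns and step names are hypothetical; a real GitOps setup would usually express this in the CI tool's own configuration.

```python
from fnmatch import fnmatchcase
from typing import Dict, List

# Hypothetical mapping of file patterns to pipeline steps (assumed names).
TRIGGERS: Dict[str, str] = {
    "*Dockerfile": "rebuild-agent-image",
    "*.tf": "terraform-plan",
    "src/*": "build-and-test-app",
}


def steps_for(changed_files: List[str]) -> List[str]:
    """Return the steps to run for a commit, in the order defined in TRIGGERS."""
    steps = []
    for pattern, step in TRIGGERS.items():
        # fnmatchcase's "*" also matches "/" so "*.tf" covers nested paths.
        if any(fnmatchcase(path, pattern) for path in changed_files):
            steps.append(step)
    return steps


print(steps_for(["infra/main.tf", "README.md"]))
print(steps_for(["agents/Dockerfile", "src/app/main.py"]))
```

A commit that only touches documentation matches no pattern, so no deployment step is selected.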

Optimize your container builds with layer caching

Use layer caching with Kubernetes to significantly speed up container builds. In Docker, once a layer changes, all downstream layers need to be rebuilt regardless of whether they’ve changed or not. For this reason, instructions that copy or install frequently changing components should be placed toward the end of the Dockerfile to avoid rebuilding layers that haven’t changed. This will keep rebuilds to a minimum and speed up container builds.
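The effect of instruction order can be seen in a small simulation. This Python sketch uses a deliberately simplified model of the cache (the first changed step invalidates itself and everything after it); the step names are hypothetical and stand in for Dockerfile instructions.

```python
from typing import List, Set


def layers_to_rebuild(steps: List[str], changed: Set[str]) -> List[str]:
    """Return the steps that must be rebuilt, given which steps' inputs changed.

    Simplified model: the first step whose input changed invalidates the
    cache for itself and for every step after it.
    """
    for index, step in enumerate(steps):
        if step in changed:
            return steps[index:]
    return []  # nothing changed, so every layer is a cache hit


# Hypothetical step names, in the order they would appear in a Dockerfile.
source_first = ["base image", "copy source", "install dependencies"]
source_last = ["base image", "install dependencies", "copy source"]

changed = {"copy source"}  # only application code changed in this commit

print(layers_to_rebuild(source_first, changed))  # ['copy source', 'install dependencies']
print(layers_to_rebuild(source_last, changed))   # ['copy source']
```

With the source copied last, a code-only change rebuilds one layer instead of two, and the (typically slow) dependency install stays cached.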

Look for opportunities to promote container images instead of rebuilding them at each stage of development. For example, rather than creating a container per environment for each service, build a single container image and supply environment-specific configuration for dev, test, and production. This offers small speed gains and increases reliability. Using the same image at each lifecycle stage reduces the chance of issues appearing for the first time in later-stage container rebuilds rather than in development or testing.

Separate your CI/CD Kubernetes cluster from other clusters

Friends don’t let friends build on production clusters. Your production Kubernetes clusters should be reserved for just that—production. There are definite advantages to creating boundaries around isolated ecosystems, and it’s key to minimizing the impact of security breaches.

For example:

- Security policies: Your production environment needs extremely restrictive permissions, especially since it’s likely to have access to sensitive information and systems. You may decide to be more permissive on your build cluster to make debugging easier, though still apply the principle of least privilege, as internal threats in CI/CD are just as critical as external threats.

- Scaling policies: Production clusters usually scale in response to load and memory/CPU utilization, whereas a CI/CD cluster may scale in response to build queue length. You can also be more aggressive when terminating CI/CD cluster resources when they are no longer required.

- Cost tracking/segmentation: Your production workloads are often more closely connected to supporting revenue generation and your customers. Your CI/CD workloads are a level removed from that direct revenue generation and production environment. Tracking the costs associated with CI/CD workloads separately can help you directly account for and maximize your investment in your build and deploy pipelines.
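To make the scaling-policy point above concrete, here is a minimal Python sketch of sizing a build cluster from queue length rather than CPU or memory. The function name, the one-agent-per-job assumption, and the default limits are illustrative and not taken from any particular autoscaler.

```python
def desired_agents(queued_jobs: int, running_jobs: int,
                   min_agents: int = 0, max_agents: int = 50) -> int:
    """Return how many build agents to run, assuming one agent per job.

    The result is clamped to [min_agents, max_agents] so an empty queue can
    scale the cluster down aggressively and a spike cannot exceed the budget.
    """
    needed = queued_jobs + running_jobs
    return max(min_agents, min(max_agents, needed))


print(desired_agents(queued_jobs=12, running_jobs=3))    # 15
print(desired_agents(queued_jobs=0, running_jobs=0))     # 0
print(desired_agents(queued_jobs=200, running_jobs=10))  # 50
```

Setting `min_agents` above zero trades some idle cost for faster build starts, which is one of the decisions a separate CI/CD cluster lets you make independently of production.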

Use canary deployments and develop a rollback strategy

Canary deployments are great for maintaining system reliability and uptime. By releasing a new version of an application as a canary to a handful of users, you can understand how it behaves in the real world without affecting the entire user base. Once you've observed and validated the changes are safe, you can continue to drip feed the new version to more and more users. If there are problems, you can redirect users to the stable version with minimal impact.

Canary deployments in Kubernetes are relatively straightforward:

- Create different deployment labels for each release. For example, `stable` for the existing version, and `canary` for the new version.

- Set the number of replicas for each deployment to control the ratio of `stable` releases to `canary` releases.

- If issues in the canary are detected, you can roll back the deployment with kubectl’s `rollout undo` command.
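The replica ratio in the steps above can be worked out with a little arithmetic. This Python sketch is an assumption-laden illustration: the function name is hypothetical, and it assumes traffic is spread roughly evenly across all pods behind a shared Service, so the replica ratio only approximates the share of users who reach the canary.

```python
from typing import Tuple


def canary_split(total_replicas: int, canary_percent: float) -> Tuple[int, int]:
    """Return (stable_replicas, canary_replicas) for a target canary share."""
    if total_replicas < 1:
        raise ValueError("total_replicas must be at least 1")
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")

    canary = round(total_replicas * canary_percent / 100)
    if canary_percent > 0:
        canary = max(1, canary)  # a canary needs at least one pod to receive traffic
    return total_replicas - canary, canary


print(canary_split(10, 10))  # (9, 1)
print(canary_split(20, 25))  # (15, 5)
print(canary_split(4, 10))   # (3, 1)
```

Note the last case: with only four replicas, the smallest possible canary is 25% of pods, so small deployments cannot achieve a fine-grained split through replica counts alone.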

Challenges with CI/CD and Kubernetes

I’ve talked a lot about the benefits of Kubernetes, but there are complexities and challenges to navigate. Building containers in Kubernetes can get really weird really quickly. Docker in Docker (DinD) is difficult to work with. It’s like building a house inside a house and not always being able to open the kitchen cupboard to get what you need to make dinner. The setup can be complicated and introduce multiple performance and security issues. Kaniko is an alternative that makes these things easier to grapple with, as it runs container build steps in userspace without depending on a Docker daemon. This blog post talks more about Docker in Docker, Kaniko, and Buildpacks and how to build containers on containers on Kubernetes.

While it can be challenging to see exactly what’s going on in a Kubernetes pod compared to a virtual machine, adopting observability best practices for CI/CD on Kubernetes can make things easier.

Understanding what’s important to your team and business will help you to decide if moving CI/CD to Kubernetes is the right decision. One way to get perspective on your CI/CD is to adopt a Site Reliability Engineering (SRE) approach and use metrics to manage your pipelines. Consider defining Service Level Objectives (SLOs) that capture what’s important to users of the system, and measure how they’re tracking with Service Level Indicators (SLIs). This will allow you to prioritize effort and measure the effectiveness of your work. Just like your production systems, CI/CD needs to be observable, scalable, reliable, and easy to maintain.
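As one possible example, an SLI for a build pipeline could be the fraction of builds that start within a target wait time, compared against an SLO. The Python sketch below uses made-up sample data, a 60-second target, and a 95% objective; all of these are assumptions chosen for illustration.

```python
from typing import List


def sli_within_target(wait_seconds: List[float], target_seconds: float) -> float:
    """Return the fraction of builds whose queue wait was within the target."""
    if not wait_seconds:
        raise ValueError("need at least one build to compute an SLI")
    good = sum(1 for wait in wait_seconds if wait <= target_seconds)
    return good / len(wait_seconds)


# Hypothetical queue wait times (in seconds) for ten recent builds.
waits = [12, 45, 30, 240, 18, 75, 22, 600, 15, 40]

sli = sli_within_target(waits, target_seconds=60)
slo = 0.95  # assumed objective: 95% of builds start within 60 seconds

print(f"SLI: {sli:.0%}, SLO met: {sli >= slo}")  # SLI: 70%, SLO met: False
```

A result like this one would suggest prioritizing agent capacity or scaling responsiveness before other pipeline work.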

Improving your build scalability

Kubernetes can certainly improve CI/CD reliability, letting you run builds of any complexity at any scale. It’s a worthwhile investment for teams with large, centralized CI/CD pipelines that serve numerous teams with a high deployment capacity. Buildkite is perfect for these sorts of large-scale CI/CD deployments and supports containerized builds for CI/CD pipelines.

Want to see how it works for yourself?

- Sign up for a free Buildkite account.

- Follow our guide to run CI/CD on Kubernetes on any cloud with Buildkite.