There can be only one.

Connor MacLeod, Highlander

Monorepos: we all love them, amirite? Well, maybe some of us love to hate them. But they are popular out there. We see companies of all sizes going all-in on this pattern of storing all their application code in a single repo and letting it grow. I won’t rehash the benefits of using a monorepo here; this Monorepo CI best practices blog spells out the advantages.

The advantages of colocated code and freedom from Git submodules come with some scalability trade-offs. When your monorepo has grown to 5GB and takes 4 hours to build, what are your options? As they say in the cloud wars, it’s scale or die.

So what, exactly, does scaling mean for a monorepo? You’re probably familiar with common dimensions of scaling compute or server resources, like horizontal vs. vertical scaling, but a monorepo has a few different dimensions of scale. Or, perhaps more accurately, as your monorepo grows there are some dimensions where you start to feel the friction.

Here are a few dimensions where a growing monorepo typically starts to cause friction, in no particular order:

- People: As you hire more developers to work in the same monorepo, things start getting crowded and complicated. Changes stack up, depend on each other in odd and even unintentional ways, and become difficult to debug and untangle when problems arise.

- Time: The more code and changes you introduce to your monorepo, the longer it takes to build your growing number of projects and run your increasing number of tests. Longer build times create a queuing effect, where only so many builds (or, more precisely, so many merges to your main branch) can run per hour or day.

- The Speed of Light: This is a constant. You can only push electrons down the wire so fast, and so far. And you can only have so many wires in a bundle before they collapse under their own weight. I’m only half joking. It’s possible in practice, not just in theory, to hit hard limits of scale when you’ve got all your eggs (code) in one basket (repo).
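To put rough numbers on the queuing effect, here’s a back-of-the-envelope sketch. It assumes every merge to main waits for one full build, and that those builds run one at a time and never fail, which is optimistic. The function name is just for illustration.

```python
def max_serial_merges(build_minutes: float, window_hours: float = 24.0) -> int:
    """Upper bound on merges to main when each merge waits for one full build.

    Assumes builds for main run strictly one after another and never fail.
    """
    if build_minutes <= 0:
        raise ValueError("build_minutes must be positive")
    # Whole builds that fit in the window; a partial build can't merge anything.
    return int(window_hours * 60 // build_minutes)


print(max_serial_merges(240))     # 6: a 4-hour build, around the clock
print(max_serial_merges(240, 8))  # 2: the same build, in an 8-hour workday
print(max_serial_merges(20, 8))   # 24
```

Even a 4-hour build running around the clock caps you at six merges a day, no matter how many developers are waiting.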

Challenge 1: People are people so why should it be, having too many of them working in the same repo can work, so awfully?

One issue you can run into when scaling a monorepo is the combination of two factors common to the garden-variety MVC monorepo:

- The number of developers making changes.

- The sequential ordering required when modifying your database schema.

I’ve seen this play out in more than one monorepo I’ve worked with. Starting out with a smaller number of developers and less need to change your database schema, you can coordinate modifications and manage breaking schema changes. If one team needs to make a UI change that depends on a database change going in first, the two changes can be sequenced or carefully orchestrated by a few conscientious devs working closely together. Likewise, a breaking database change can be communicated and shepherded through the process.

But you reach a point where careful coordination becomes too much to handle, too brittle and error-prone, or just plain slows your teams down. There are a number of commercial off-the-shelf (COTS) and open source tools to help manage your database schema while supporting an ever-increasing number of monorepo contributors. Which one you choose can depend on your underlying database and architecture: whether the tool supports your flavor of relational database, and whether it gels with your primary programming language and your team. Turns out, you can also build your own bespoke tooling to handle this issue!

As a consummate DIY’er, I’ve built tooling to handle this case, and it didn’t need to be heavyweight or handle every possible combination the way a COTS tool would. Building something for your precise use case means you get a tool tailored specifically to your needs. In my case, we optimized for DevEx, aiming to keep database schema changes as nonchalant as possible. Developers could write their code; maybe it included a database schema change, maybe it didn’t, but it wasn’t a big production. We adopted a naming convention: developers created a “normal” DDL file with their schema changes, named it to match their Jira ticket number, and the automation handled the rest.

The lightweight automation was fewer than 100 lines of code. It batched up the changes, applied them to a pre-production database in a deterministic order, and re-saved them with new file names representing that order. That way, the DDL files could be applied consistently, in the same order, to each successively closer-to-production environment and database. It resolved any contention or concerns about “who can make the next schema change?” and made developers’ lives better.
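The original tool isn’t shown, so here’s a minimal Python sketch of the same idea under assumptions of my own: pending files are named after a Jira key like `PLAT-123.sql`, already-sequenced files carry a four-digit prefix like `0007_PLAT-123.sql`, pending files are ordered by project key and then ticket number, and `sqlite3` stands in for the pre-production database. The file-name patterns, the ordering rule, and the function names are all hypothetical.

```python
import re
import sqlite3
from pathlib import Path

# Hypothetical naming conventions, not the original tool's:
PENDING = re.compile(r"^([A-Z][A-Z0-9]+)-(\d+)\.sql$")  # e.g. "PLAT-123.sql"
SEQUENCED = re.compile(r"^(\d{4})_.+\.sql$")           # e.g. "0007_PLAT-123.sql"


def plan_renames(filenames: list[str]) -> list[tuple[str, str]]:
    """Assign the next sequence numbers to pending DDL files, deterministically."""
    # Highest sequence number already handed out (0 if none yet).
    last = max(
        (int(m.group(1)) for f in filenames if (m := SEQUENCED.match(f))),
        default=0,
    )
    # Hypothetical ordering rule: project key, then numeric ticket id,
    # so every run over the same files produces the same order.
    pending = sorted(
        (m.group(1), int(m.group(2)), f)
        for f in filenames
        if (m := PENDING.match(f))
    )
    return [(f, f"{last + i:04d}_{f}") for i, (_, _, f) in enumerate(pending, start=1)]


def apply_batch(directory: Path, conn: sqlite3.Connection) -> list[tuple[str, str]]:
    """Apply pending DDL in order to a pre-production DB, then rename to record the order."""
    renames = plan_renames([p.name for p in directory.iterdir()])
    for old, new in renames:
        conn.executescript((directory / old).read_text())  # run the schema change
        (directory / old).rename(directory / new)          # persist its position
    return renames
```

Running `apply_batch` a second time does nothing, because renamed files no longer match the pending pattern. Later environments can then apply the `NNNN_` files in plain lexical order, which the zero padding keeps identical to numeric order.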

Challenge 2: If your build, it is stuck, and your day should be over, I will be waiting…(time after time)

Exceeding your capacity to build and test each change before it’s merged into your main branch is another issue when working in a growing monorepo. Even with parallel compute resources and relatively short build and test times, there are only so many hours in the day. You can batch changes with something like a merge queue, which gathers a number of proposed changes (often in the form of pull requests or merge requests) and builds them together to validate that they don’t break the build. But merge queues as an automated, fully fledged feature are relatively new. So let’s take a trip down memory lane to look at some older tools that can still help address monorepo scaling issues today.
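As a rough illustration of why batching helps, here’s a sketch that assumes a queue of PR identifiers, a fixed batch size, and one build per batch. These are simplifying assumptions; real merge queues, such as GitHub’s, have their own rules. Because each build validates several changes at once, the same build capacity covers more merges, as long as most batches pass.

```python
from itertools import islice
from typing import Iterable, Iterator


def batches(queue: Iterable[str], size: int) -> Iterator[list[str]]:
    """Yield queued PRs in groups of `size`; each group gets a single CI build."""
    if size < 1:
        raise ValueError("size must be at least 1")
    it = iter(queue)
    while batch := list(islice(it, size)):
        yield batch


def merges_per_day(build_minutes: float, batch_size: int, window_hours: float = 24.0) -> int:
    """Best-case merges per day when every batch build passes."""
    builds = int(window_hours * 60 // build_minutes)
    return builds * batch_size


queue = [f"PR-{n}" for n in range(1, 8)]
print(list(batches(queue, 3)))  # [['PR-1', 'PR-2', 'PR-3'], ['PR-4', 'PR-5', 'PR-6'], ['PR-7']]
print(merges_per_day(45, 1))    # 32
print(merges_per_day(45, 10))   # 320
```

The catch is the "every batch passes" assumption: a failing batch has to be split and rebuilt, which eats into the gains.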

The precursor to the merge queue, long before GitHub offered one as a feature and before I had built my own bespoke version, is the release train. I’ve used release trains at various companies; maybe you’ve seen them yourself, chugga-chugga-choo-choo-ing along. The general idea is that you “hold” the build of your monorepo until enough changes are lined up at the station to board the build train. Although a release train is a partly or fully manual way of operating, it can still scale and help you manage a lot of concurrent changes in your monorepo for years.
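The “hold until enough passengers board” rule can be sketched as a simple departure check. The `Train` class and its thresholds (a minimum passenger count, a capacity, and a maximum wait so a quiet day doesn’t strand anyone) are hypothetical; many teams run this step by hand.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Train:
    """Hypothetical release train: PRs board until it's full enough or has waited long enough."""
    min_passengers: int = 5
    max_passengers: int = 20
    max_wait: timedelta = timedelta(hours=2)
    opened_at: datetime = field(default_factory=datetime.now)
    passengers: list[str] = field(default_factory=list)

    def board(self, pr: str) -> bool:
        """Add a PR if there is room; return False when the train is full."""
        if len(self.passengers) >= self.max_passengers:
            return False
        self.passengers.append(pr)
        return True

    def should_depart(self, now: datetime) -> bool:
        """Leave when enough PRs have boarded, or when the station has waited too long."""
        if not self.passengers:
            return False  # never run an empty train
        return (len(self.passengers) >= self.min_passengers
                or now - self.opened_at >= self.max_wait)
```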

Often the release train is driven by an engineer who becomes an expert in dealing with trains, their operations, and their foibles. I’ve been that engineer, an expert in sorting through changes, bisecting lists of PRs to remove that rowdy passenger from the train, learning the limits of how many passengers could board at once before it became so overloaded that it couldn’t leave the station.
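Bisecting a failed train can be automated. The sketch below assumes a hypothetical `build_passes(prs)` callback that builds main plus the given PRs and reports success, and it assumes a single culprit that fails regardless of which other PRs ride along. Halving the list each time needs roughly log2(n) extra builds instead of one build per PR.

```python
from typing import Callable, Sequence


def find_rowdy_passenger(
    prs: Sequence[str], build_passes: Callable[[Sequence[str]], bool]
) -> str:
    """Binary-search a failing train for the one PR that breaks the build.

    Assumes exactly one culprit and that it fails regardless of which other
    PRs ride along with it.
    """
    if build_passes(prs):
        raise ValueError("the whole train passes; nothing to remove")
    candidates = list(prs)
    while len(candidates) > 1:
        half = candidates[: len(candidates) // 2]
        # If the first half fails on its own, the culprit is in it; otherwise it's in the rest.
        candidates = half if not build_passes(half) else candidates[len(half):]
    return candidates[0]
```

With interacting changes (two PRs that only break together), this simple search can point at the wrong passenger, which is where an experienced train engineer earns their keep.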

A release train can also scale beyond one or two engineers to a team of trained, highly specialized operators who are familiar with the operation of the train. I’ve seen this strategy succeed with a large monorepo that had a very high throughput of daily changes. The team of train engineers divided the day’s work into shifts of a handful of hours at a time, deploying incredible numbers of changes on release trains and sustaining that pace day after day. Operating release trains can be exhausting, focused, and repetitive work. That’s why short shifts can help train engineers’ overall happiness and wellbeing, and keep them mentally sharp for solving train issues and handling any unruly passengers who may have broken the build.

Release trains can begin to break down when you reach a certain level of scale with the number of changes being made in your monorepo. You’ll likely experience problems similar to what drove you to jump on the release train in the first place: not enough hours in the day for the trains to run, too many passengers trying to get on, and failure (or revert) rates climbing. But a release train, whether run by one or many engineers, can serve you well for quite a long time before you need to shift tactics.
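A quick model shows why overloaded trains stall. If each PR independently breaks the build with probability p (a simplifying assumption, since real failures are rarely independent), a train of n PRs builds cleanly only with probability (1 - p)^n. That shrinks quickly as the train grows.

```python
def train_pass_probability(n_prs: int, p_fail: float) -> float:
    """Probability that a train of n independent PRs builds cleanly."""
    if not 0.0 <= p_fail <= 1.0:
        raise ValueError("p_fail must be between 0 and 1")
    return (1.0 - p_fail) ** n_prs


# With a 2% per-PR failure rate:
for n in (5, 20, 50):
    print(n, round(train_pass_probability(n, 0.02), 2))
# 5 0.9
# 20 0.67
# 50 0.36
```

At 50 passengers, roughly two trains in three need at least one passenger removed before they can leave the station.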

Challenge 3: With so many light years to go, and bugs to be found…

One Monday morning, I showed up for my first day at a new workplace. My boss greeted me with the news that the company had split their monorepo in two the Friday before I started, and the lead engineer doing the split had shipped it to prod that same Friday afternoon without telling anyone ahead of time. Surprise! At least, in this case, most things still worked. There was minimal downtime, and no one had to work the weekend 😂. So what had very recently been one large monorepo was now two not-so-large monorepos: a backend monorepo and a frontend monorepo.

Naturally, I had some questions:

- Do we now have to coordinate things between the frontend monorepo and the backend monorepo?

- Do all the developers have to clone two whole Git repos now?

- Do we now have:

  - Twice the monorepos?

  - Twice the duplicated code?

  - Twice the work to maintain them?

The answers were yes, yes, and no, no, no.

You see, sometimes you hit scaling limits that are really, really expensive to overcome while still maintaining a single monorepo. That’s OK! Not everyone has a pile of money lying around to buy some other company that makes an optimized file system capable of building your entire monorepo in under 5 minutes. A monorepo is a good architectural decision in some cases and for some reasons, but it isn’t a religious movement you have to adhere to or risk excommunication. Sometimes bifurcating the monorepo along logical boundaries is the right decision and makes the most sense.

A side benefit is that two “cloned” monorepos that originated from the same single monorepo are actually pretty easy to work with, for both you and your developer population. They mostly operate the same: you’ve already got tooling that handles them, and everyone is used to the scale and knows where most of the sharp edges are. You’re not doubling the work, and you actually gain some efficiencies from having that familiarity in place across both monorepos. And don’t forget another side effect: up to a 50% drop in the number of developers contributing to each monorepo, buying you more time as you keep growing and scaling.

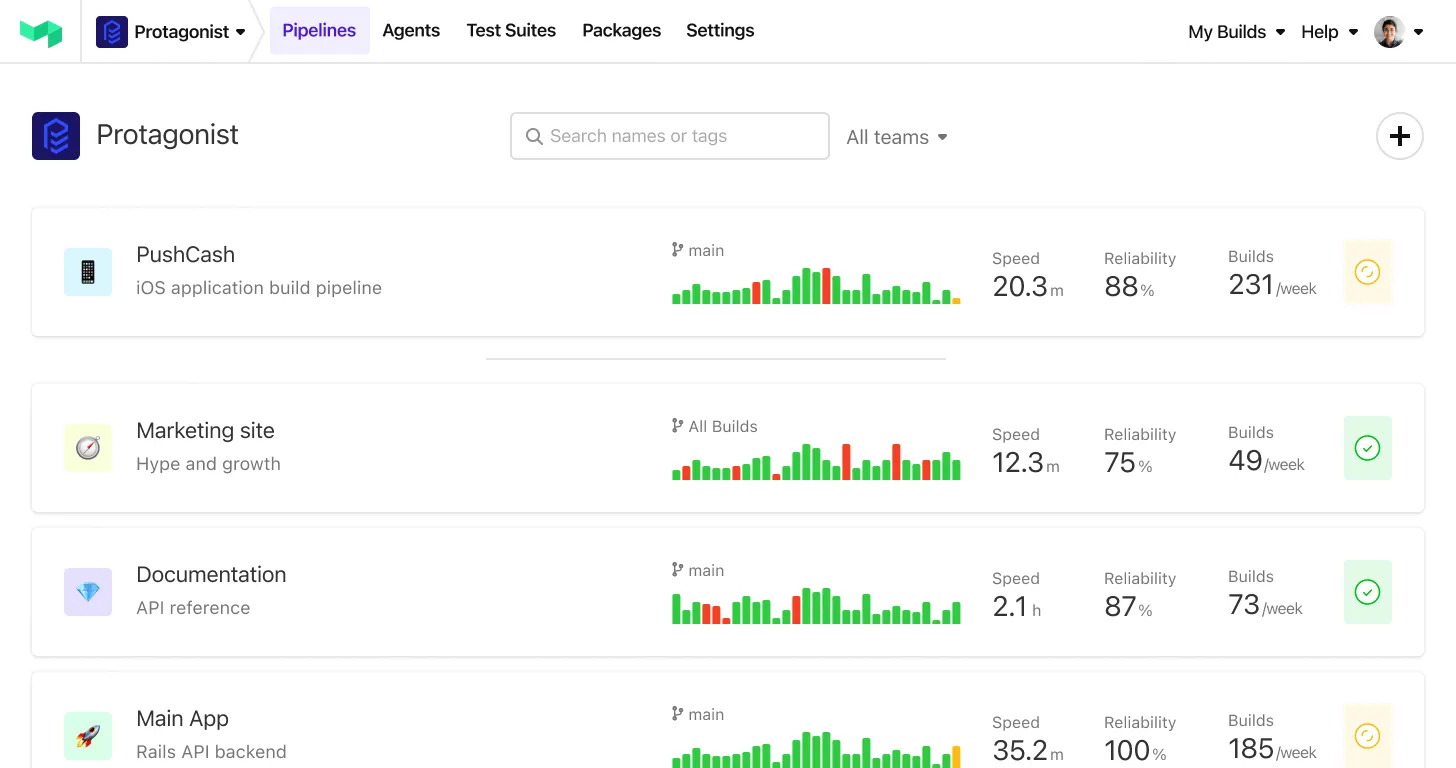

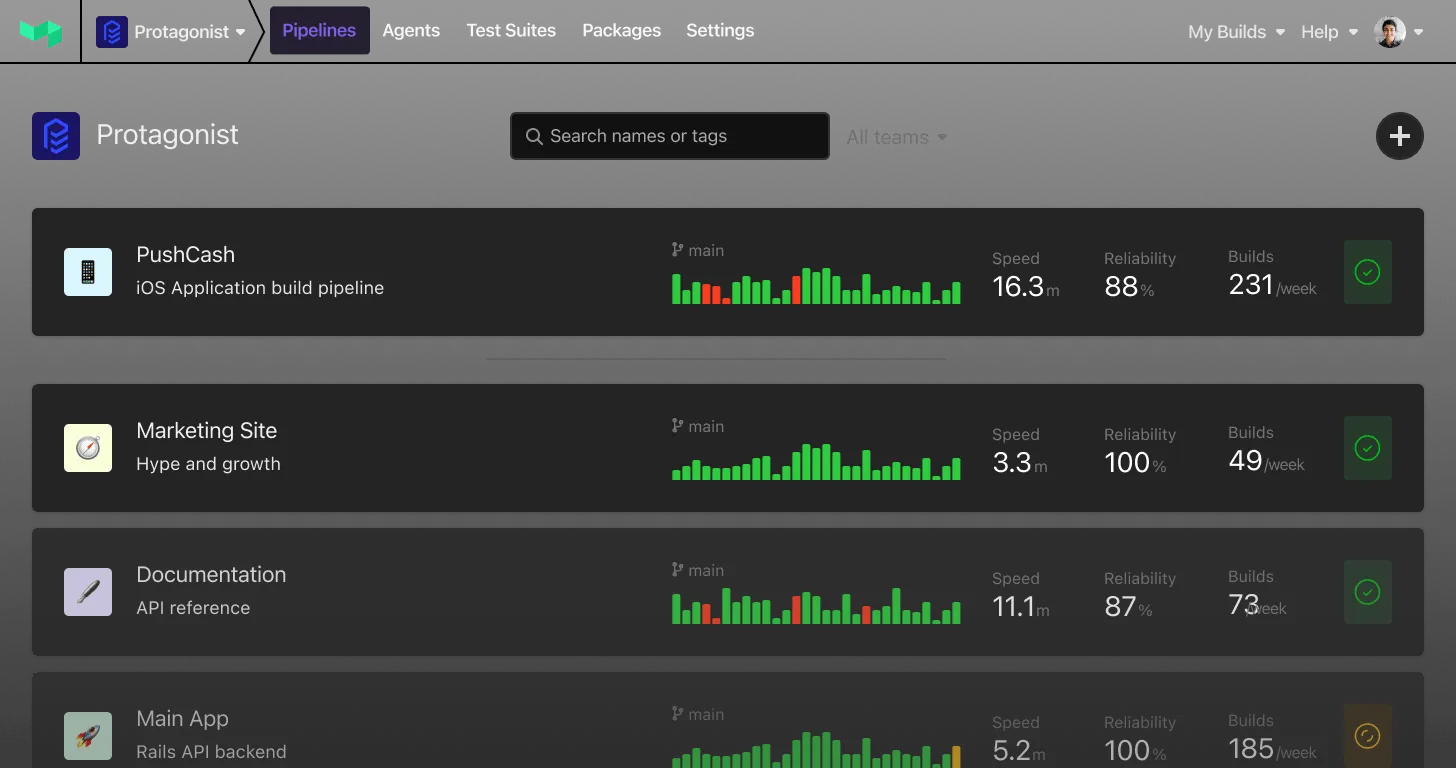

Reminder: Why can’t we give metrics that one more chance?

When scaling CI for your monorepo, regardless of the challenges you face and how you solve them, measurement is your friend. It’s easy to invest a bunch of time, energy, and lines of code in automation to try to improve things. We know our developers will appreciate that hard work. But showing the tangible benefits with quantitative and qualitative metrics will be even better for you, your developers, and your leadership (especially any skeptics!).

The metrics you choose will often be similar to those of other teams or organizations, but there’s no one-size-fits-all measurement for improving your monorepo. The metric you most need to move is typically tied to your greatest pain point:

- Not enough time in the day for release trains to leave the station.

- Too many merge queue batches to build.

- Adding developers causes more conflicts and resource contention.

- A multi-hour pipeline that can’t be parallelized any further.

For each of these, you’ll want to take a baseline and measure again after you’ve applied each improvement.
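Taking a baseline doesn’t need heavy tooling. As a minimal sketch, assuming you can export build durations in minutes for a period before and after an improvement, comparing the median with a high percentile shows whether the change helps the slow tail as well as the typical build. The function names are illustrative.

```python
from statistics import median, quantiles


def summarize(durations_min: list[float]) -> dict[str, float]:
    """Median and 90th-percentile build duration (minutes) for one period."""
    # quantiles(n=10) returns 9 cut points; the last one is the 90th percentile.
    return {"p50": median(durations_min), "p90": quantiles(durations_min, n=10)[-1]}


def percent_change(before: float, after: float) -> float:
    """Relative change from the baseline; negative means the number went down."""
    return (after - before) / before * 100


def compare(before: list[float], after: list[float]) -> dict[str, float]:
    """Percent change of each summary statistic between the two periods."""
    b, a = summarize(before), summarize(after)
    return {key: percent_change(b[key], a[key]) for key in b}
```

The same shape works for other numbers on the list above, such as trains per day or merge queue pass rate; only the input series changes.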

But in this world of logic and math, don’t discount qualitative measures, either. Developer happiness and morale are important. If you run a million release trains a day and improve your merge queue pass rate by 30% but have no love for your users, you gain nothing. Surveying users for their perspective on using your monorepo’s CI pipeline can provide valuable insights. Sometimes you’ll find they’re better served by addressing other issues, such as UX or the troubleshooting experience, than by an across-the-board speedup that might look more impressive on paper to our engineering brains.

Conclusion: Who wants (their monorepo) to live forever?

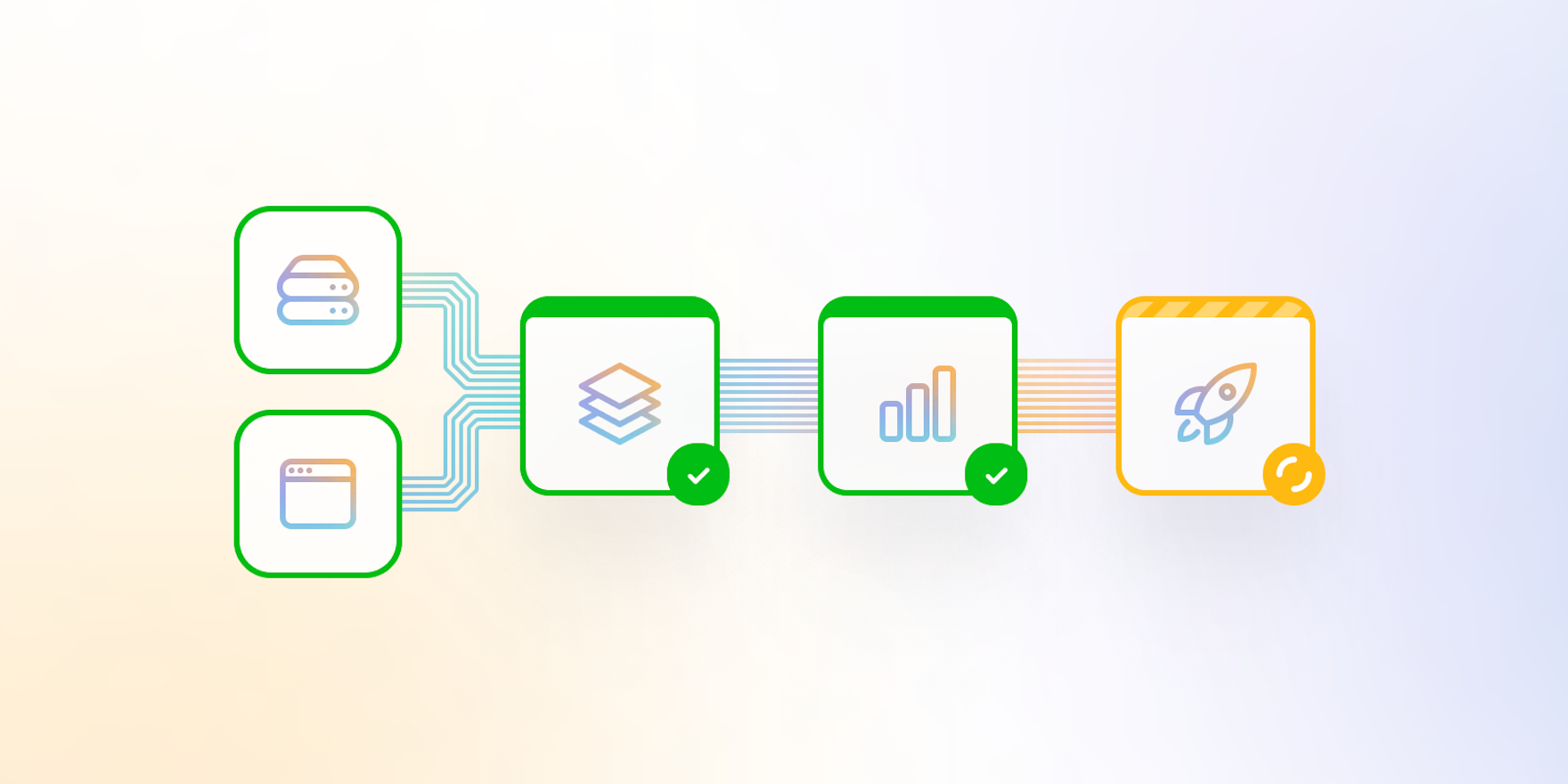

I’ve seen many Buildkite customers succeed with monorepos of varying sizes and complexity. Buildkite offers some great building blocks to help scale your monorepo CI pipelines and delight your users.

I regularly recommend the Buildkite monorepo plugin for straightforward build avoidance. And for more complex cases, dynamic pipelines let you write the exact code you need to scale CI for your unique monorepo. You also have the option to split your monorepo’s CI into multiple steps or pipelines, which can be triggered and run with unlimited concurrency (your wallet or cloud budget is the only limit). This flexibility lets customers of different stripes split their pipelines and workloads in the way that makes the most sense for them, rather than being forced into the confines of doing it “the <insert_vendor_name> way.”
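With dynamic pipelines, a script decides which steps to run. Here’s a hedged sketch of path-based build avoidance: it maps changed files to projects and prints only the steps those projects need as JSON, which could be piped into `buildkite-agent pipeline upload`. The project directories, test commands, and `origin/main` base branch are hypothetical; check the Buildkite docs for the full step schema.

```python
import json
import os
import subprocess

# Hypothetical project layout: directory prefix -> command that tests it.
PROJECTS = {
    "backend/": "make -C backend test",
    "frontend/": "make -C frontend test",
    "shared/": "make -C shared test",
}


def changed_files(base: str = "origin/main") -> list[str]:
    """Files changed on this branch since it diverged from `base`."""
    result = subprocess.run(
        ["git", "diff", "--name-only", f"{base}...HEAD"],
        check=True, capture_output=True, text=True,
    )
    return [line for line in result.stdout.splitlines() if line]


def steps_for(files: list[str]) -> list[dict[str, str]]:
    """One command step per project that has at least one changed file."""
    return [
        {"label": f"test {prefix.rstrip('/')}", "command": command}
        for prefix, command in PROJECTS.items()
        if any(f.startswith(prefix) for f in files)
    ]


def main() -> None:
    # JSON output, intended to be piped into `buildkite-agent pipeline upload`.
    print(json.dumps({"steps": steps_for(changed_files())}, indent=2))


# Buildkite agents set BUILDKITE=true; the guard keeps local imports
# and tests from shelling out to git.
if __name__ == "__main__" and os.environ.get("BUILDKITE") == "true":
    main()
```

A real setup would also need to handle dependencies between projects (a change under `shared/` probably affects the others) and decide what to do when no project changed at all.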

You can sign up for a free trial of Buildkite today and test-drive your pipeline to see how it fits and scales with your monorepo, all while keeping your source code safe and secure on your own network and compute infrastructure. It’s as simple as launching a CloudFormation template on AWS, and you’ve got autoscaling workers for powerful parallelization and build avoidance to alleviate your trickiest monorepo problems!